#100 — The Whale kept shipping, GPT 4.5, Laravel builds an IaaS, Git up, announcing a hiatus and more

Amazon's internal cleanup, a vulnerability in Todesktop, FireflySpace's moon landing, Ethereum's new leadership, Fast Inverse Square Root, Doom in TypeScript & more

👋🏻 Welcome to the 💯th!

Yes! 100 consecutive weeks (~2 years)

btw, The Nibble is taking a hiatus for now. We are taking the time to reflect on how far we have come and what we'd want to do differently, and we will get back with even more vigor.

📰 Read #100 on Substack for the best formatting

🎧 You can also listen to the podcast version, powered by NotebookLM

Now onto the edition…

What’s Happening 📰

🧹 Amazon went on an internal cleanup spree and shut down Chime (their in-house video conferencing app) and also Amazon’s Android App Store (you might have seen one in FireTV). Must be good for employees to see those Jira Boards go One to Zero.

🐛 Todesktop, the Electron app bundler that turns your web app into a cross-platform desktop app, had a vulnerability (now patched) that let anyone push arbitrary updates to popular applications such as Clickup, Cursor, Linear, and Notion Calendar!

In short, Todesktop’s build container stored decryptable secrets and had a post-install script execution vulnerability, which allowed an attacker to access those secrets and use them to push updates to any application.

🌖 FireflySpace’s BlueGhost touched down on the Moon on March 2nd, making Firefly the first commercial company to achieve a fully successful soft landing there. It has sent a postcard from the Moon.

Source: Firefly_Space’s Tweet

✨ AGI Digest

🗃️ Open Source keeps winning

🐳 The Whale continued shipping through the week and even surprised us with an extra Day 6 release. Oh, and they also announced off-peak hours discounts, slashing API costs by up to 75%.

On Day 3, they released DeepGEMM, an FP8 GEMM (General Matrix Multiplication) library for both dense and MoE GEMMs, which they use for training and inference of their V3/R1 models. This library has minimal dependencies and achieves 1350+ FP8 TFLOPS on Hopper GPUs.

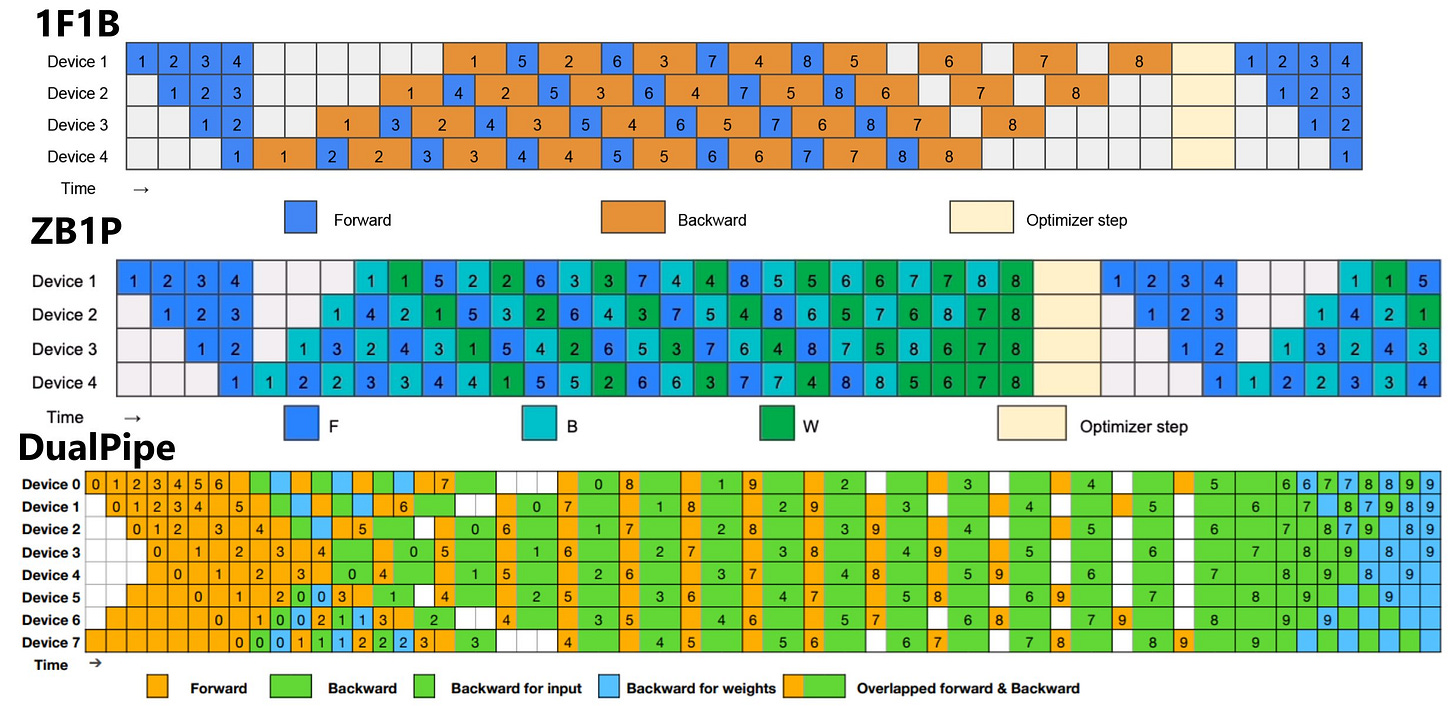

On Day 4, they released DualPipe, a bidirectional pipeline parallelism algorithm for computation-communication overlap when training LLMs, and the Expert Parallelism Load Balancer (EPLB), which introduces a redundant experts strategy that duplicates heavily loaded experts.

And it just keeps getting better. On Day 5 they dropped a literal filesystem called 3FS (Fire-Flyer File System), a parallel file system that utilizes the full bandwidth of modern SSDs and RDMA networks. This system combines the throughput of thousands of SSDs and the network bandwidth of hundreds of storage nodes, letting applications access storage resources in a locality-oblivious manner. It also serves as a cost-effective alternative to DRAM-based caching, offering high throughput and significantly larger capacity.

And we got a bonus Day 6 “one more thing” moment: a look at their inference system, which can serve 73.7k/14.8k input/output tokens per second per H800 node with a cost profit margin of a whopping 545%! What’s crazy is that they run inference on the H800, a lower-bandwidth version of Nvidia’s standard H100 built to comply with export restrictions on the Chinese market. Imagine what they could do with access to the newer and much more advanced chips.
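To put the 545% figure in perspective, here is a minimal sketch of the arithmetic. It assumes that "cost profit margin" means profit divided by cost (that reading is our assumption, not something spelled out above), and the function names are made up for illustration.

```python
def cost_profit_margin(revenue: float, cost: float) -> float:
    """Profit as a percentage of cost (assumed definition of 'cost profit margin')."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (revenue - cost) / cost * 100.0


def revenue_per_cost_unit(margin_percent: float) -> float:
    """Revenue earned for every 1 unit of cost at the given margin."""
    return 1.0 + margin_percent / 100.0


if __name__ == "__main__":
    # Under this definition, a 545% margin means revenue is about 6.45x the cost.
    print(round(revenue_per_cost_unit(545.0), 2))
```

Under that assumed definition, every dollar spent on serving would bring back about $6.45 in revenue.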

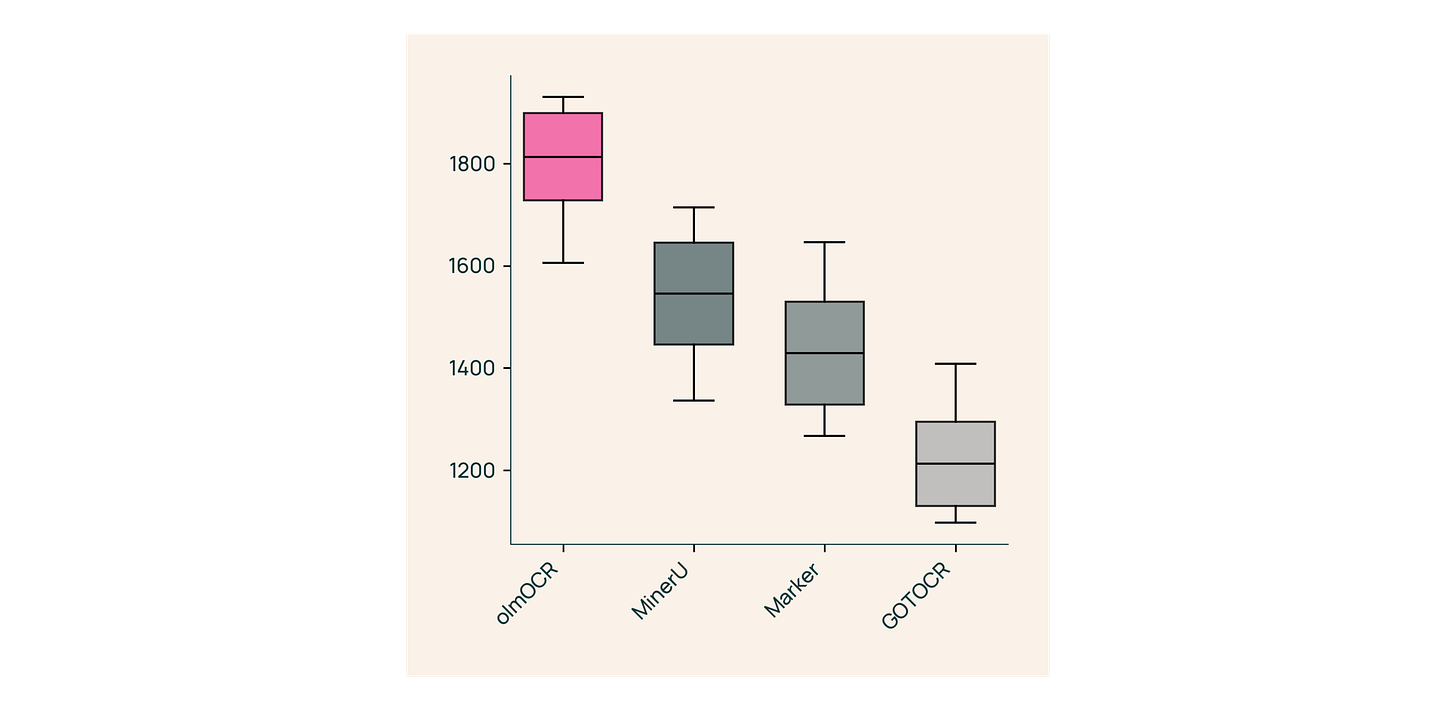

👀 AllenAI released olmOCR, an open-source tool to extract clean plain text from PDFs. It can process one million PDF pages for about $190, which is roughly 1/32 the price to do the same with GPT-4o APIs in batch mode.

Their secret sauce? They used GPT-4o to generate detailed PDF-to-Markdown samples using what they call “document anchoring”, which leverages metadata and images in the PDF to improve the quality of extraction. They then used these samples to finetune Qwen2-VL-7B-Instruct and arrive at the olmOCR model.
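The pricing claim is easy to sanity-check. The sketch below only rearranges the two numbers quoted above ($190 per million pages, roughly 1/32 of the GPT-4o batch price); the implied GPT-4o figure is derived from that ratio, not taken from a price list, and the function name is hypothetical.

```python
OLMOCR_USD_PER_MILLION_PAGES = 190.0  # figure quoted above
PRICE_RATIO_VS_GPT4O_BATCH = 32       # "roughly 1/32 the price", also quoted above


def cost_for_pages(pages: int, usd_per_million_pages: float) -> float:
    """Linear cost estimate for converting a given number of PDF pages."""
    return pages * usd_per_million_pages / 1_000_000


# Implied GPT-4o batch cost per million pages, derived from the ratio only.
implied_gpt4o_usd_per_million = OLMOCR_USD_PER_MILLION_PAGES * PRICE_RATIO_VS_GPT4O_BATCH

if __name__ == "__main__":
    print(cost_for_pages(10_000, OLMOCR_USD_PER_MILLION_PAGES))   # olmOCR estimate
    print(cost_for_pages(10_000, implied_gpt4o_usd_per_million))  # implied GPT-4o estimate
```

So a 10,000-page archive would cost about $1.90 with olmOCR versus roughly $61 at the implied GPT-4o batch price.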

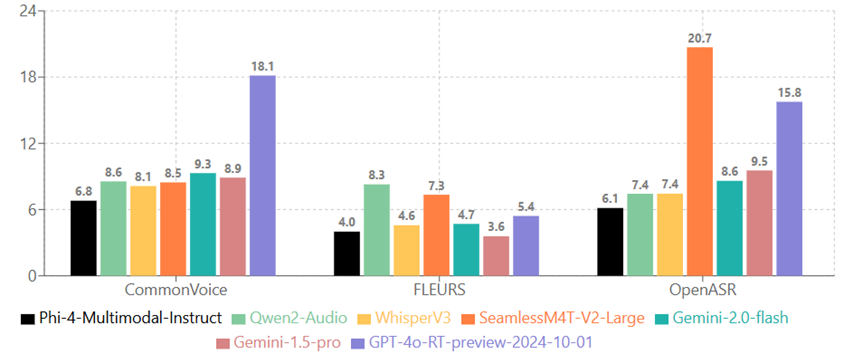

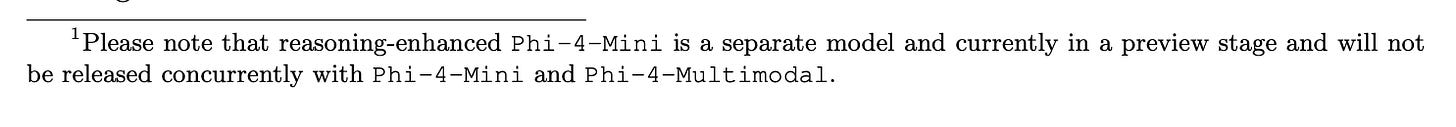

🎤 Microsoft’s Phi-4 family got an update with the Phi-4 Mini Instruct (3.8B) and Phi-4 Multimodal Instruct (5.6B) models, both under the MIT license, the latter with audio (in 23 different languages) and image input support.

The multimodal model is #1 on the Hugging Face OpenASR leaderboard for speech recognition (better than OpenAI’s Whisper). They achieve these multimodal improvements over the text-only model without full retraining by using a “Mixture of LoRA”, in which modality-specific routers allow multiple inference modes combining various modalities without interference.

Plus, we might be getting a reasoning model from the team pretty soon too 🤞

🎙️ Voice Models Galore

✍️ Talking of beating Whisper, ElevenLabs launched Scribe, a really accurate speech-to-text model that outperforms Gemini 2.0 Flash and Whisper v3 (in English).

Scribe can transcribe speech in 99 languages, with word-level timestamps and even speaker diarization. Though at $0.40 per hour of input audio, you might also want to consider Gemini 2.0 Flash, which can be a lot cheaper with minimal loss in accuracy.

📣 Sesame released a mindblowing demo of its Conversational Speech Model (CSM), one of the most expressive voice demos from a language model that we have seen so far.

CSM comes in three sizes — a 1B tiny model with a 100M decoder, a small 3B model with a 250M decoder, and an 8B model with a 300M decoder. The multimodal backbone processes the interleaved text and audio and the decoder reconstructs speech from it. And they’re saying this would be Open-Sourced with an Apache License!? It’s going to get crazy good.

📦 And some more miscellaneous announcements

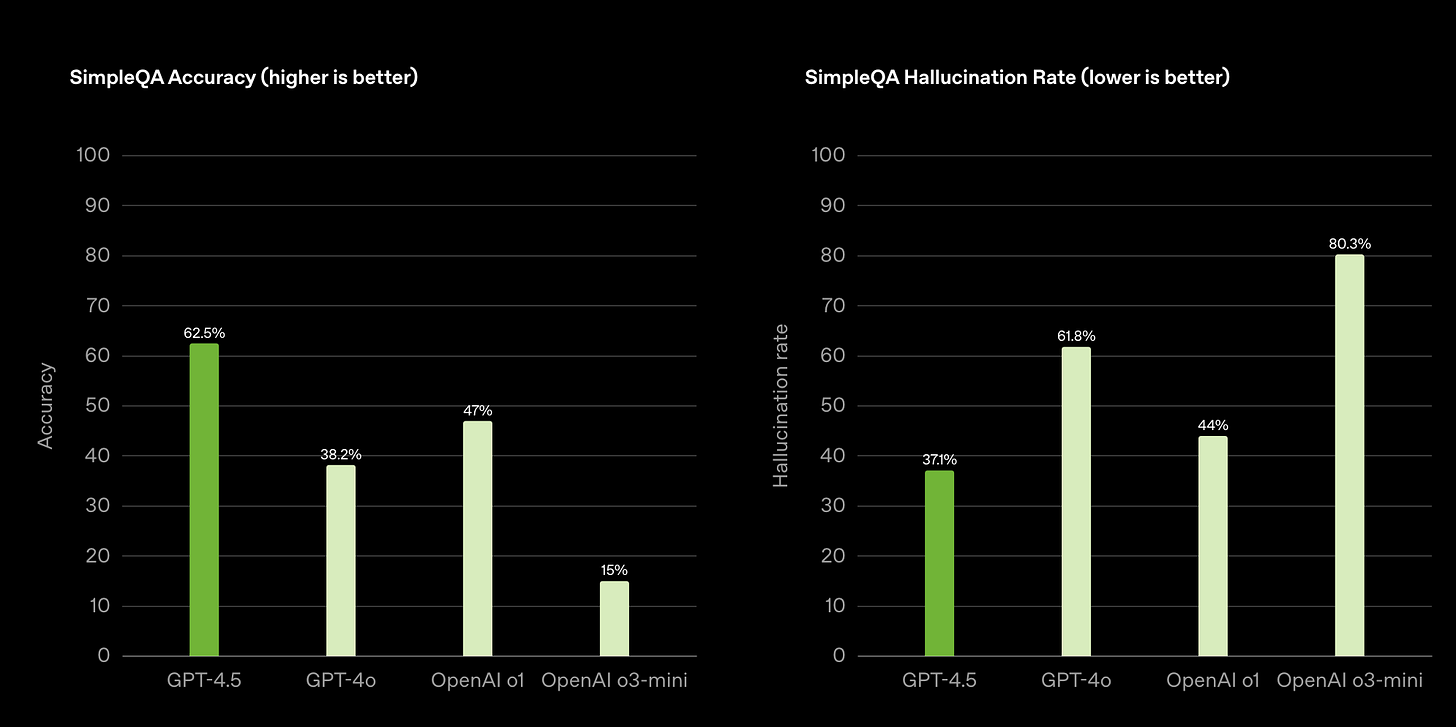

✍️ OpenAI released their "last non-chain-of-thought model", GPT-4.5, and people had lots of mixed reactions. It did top LMArena for a while (still #1 with style control though!), which means that people prefer its responses in blind comparisons with other models on the questions asked on the arena. Yet on Karpathy's questionnaire, most people could not tell GPT-4.5 apart from GPT-4 (not "high-taste testers", eh?). The model is good at understanding user intent and at creative writing like humor, which btw is much harder to get right because we do not have an objective way to measure it, as we have for coding and maths. People seem to be having fun with its greentext¹ generation.

On real-world tasks like coding and agentic capabilities, Sonnet 3.7 still rules though, and with the massive $75.00/M input and $150.00/M output token pricing, you're better off not switching your existing workflows to GPT-4.5 until it makes a massive difference to you. Right now, you can test it on the Pro and Plus plans as well as on Perplexity Pro.

🌡️ Diffusion-based LLMs are having their moment right now. First, it was the LLaDA paper, and now we have Inception Labs announcing Mercury dLLM, which caught up with both the speed and quality of traditional dense LLMs very quickly. They announced two code-specific models, Mercury Mini and Mercury Small, which together achieve performance similar to Gemini 2.0 Flash on various benchmarks.

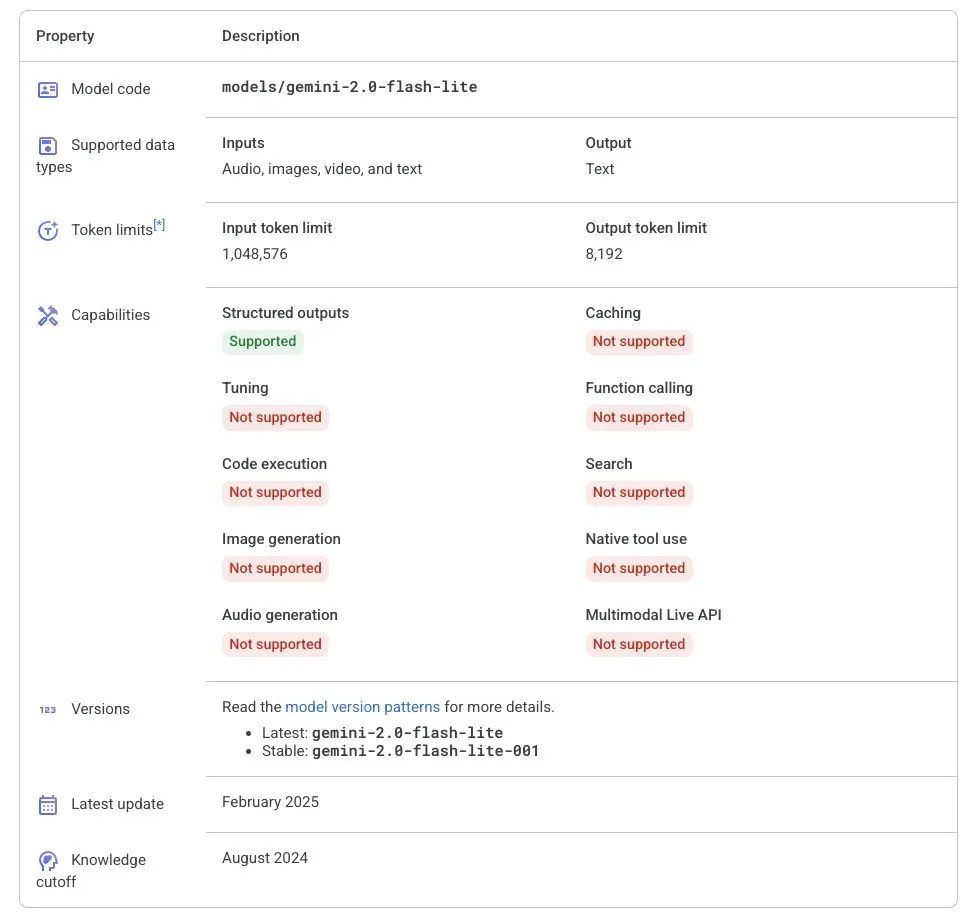

🔦 DeepMind’s Gemini 2.0 Flash Lite is now generally available, making intelligence too cheap to measure. Like its elder sibling, it outperforms the previous 1.5 Flash models on multiple benchmarks, at a fraction of the cost of 2.0 Flash. Use it for quick, simple tasks such as translations or small summaries which do not require much intelligence. As always, you can try it out on AI Studio for free before integrating it into your apps.

💻 Following Anthropic’s Claude Code agentic coding tool, the Gemini Team released Gemini Code Assist with 180K (what?) free code completions per month and a 128K context window. And they also have a GitHub app for PR reviews on your codebases natively on GitHub!

🔧 Anthropic quietly updated its API to support the OpenAI SDK using https://api.anthropic.com/v1/ as the base URL. Though it obviously does not provide 100% compatibility with all the SDK parameters, this is really cool to have because you can now use a single powerful SDK to call all of OpenAI, Anthropic, Google, DeepSeek, and Grok models. Anthropic folks also rolled out a beta feature flag in the Sonnet 3.7 API to reduce tokens in tool use, saving on average 14%, and up to 70%, in output tokens, which also reduces latency. You can provide anthropic-beta: token-efficient-tools-2025-02-19 in the header to invoke this.

🔊 Talking of Anthropic, the next version of Amazon Alexa, called Alexa+, will be powered by Anthropic Claude. Costing $19.99 per month and free for Prime members, Alexa+ will roll out to the US first and subsequently to other regions in the coming weeks. It is said to be more conversational than its predecessor, as well as much more knowledgeable in its responses and better at controlling your home accessories.

🤑 With the traditional LLM benchmarks saturating, people are turning to testing LLMs on tasks that feel much closer to what a human would actually do. Andon Labs’ Vending-Bench does just that by letting LLM agents manage a simulated vending machine business. The agents need to handle ordering, inventory management, and pricing over long-term horizons to successfully make money. So far, Claude 3.5 and o3-mini are the only two models whose mean reward has beaten a human, but the variance is still too high. We also have an AI Trading Arena where LLMs manage a real portfolio, taking in market news and buying/selling stocks live in the market. Here as well, Sonnet 3.7 bags the highest profit.

🔐 0x Digest

👑 Ethereum Foundation brings in new leadership as demanded by the community, welcoming two new co-Executive Directors to take care of the roadmap ahead.

They promoted Hsiao-Wei Wang, who was a researcher at EF for the last 7 years, and got Tomasz Stanczak from Nethermind for these roles.

✨ Dubai approves use of USDC and EURC in DIFC (Dubai International Financial Centre).

Dubai has a unique connection with Circle and cryptocurrencies in general. Its relaxed tax policies and crypto-friendly rules keep making it a more and more attractive place for crypto firms to take shelter.

🛠️ Dev & Design Digest

😱 Truffle Security found ~12k live API keys and passwords in Common Crawl, the scraped data from the web which is used to pretrain a majority of LLMs today!

The 400TB of data (which is approximately equivalent to ~3640 complete copies of the entire Wikipedia) contained live secrets in over 2.7 million web pages. Most of the secrets appeared multiple times, with one WalkScore API key appearing 57k times across 1,871 subdomains! The lessons for us are to always store our secrets encrypted and never hardcode them into our apps.

🆕 TypeScript 5.8 is now out, with better checks on return types, support for require(esm), and the star of the show, a new flag --erasableSyntaxOnly, which forbids the use of syntax that can’t be erased when stripping types.

This new flag also says “I gotchu” back to Node.js’s --experimental-strip-types, making it a closed and compatible ecosystem.

🚀 Laravel launched its own IaaS platform, Laravel Cloud, to deploy Laravel apps in under 1 minute. The PHP framework raised a $57M Series A in Sept 2024, and this almost feels like the natural next step at this point (because we do the same thing in JS Land).

They went over and above and added some Starter Kits, a VS Code extension, and whatever else a developer might need to get started.

🦕 Deno shipped v2.2 mid last month with built-in OpenTelemetry support, faster linting, precise benchmarking, smaller bundling and what not.

Nayr Ryan Inc. has been really moving faster than Jared & Co. at Bun; Deno’s last 3 versions look closer to stable than Bun as a whole. Maybe I know what my side-projects are gonna use.

🤝 You have read ~50% of Nibble, the following section brings some fun stuff and tools out from the wild.

What Brings Us T(w)o Awe 😳

√ One of the things that the legendary John Carmack did (along with single-handedly inspiring the world of 3D games) was popularize the Fast Inverse Square Root algorithm, which combines clever bit manipulation tricks with a bit of Newton’s method.

🤯 We probably saw the craziest project you’ll encounter in a decade: Michigan TypeScript built a Doom engine running purely in TypeScript types. Yes, he rendered a Doom frame in the process and ended up building a VM, a compiler, and whatnot. And before you ask, AI code generators couldn’t help him one bit with these efforts, making this a year-long journey of determination.
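For the curious, here is a minimal Python sketch of the trick. It is not the original C code: the struct round-trip stands in for the pointer cast, the function name and the newton_steps parameter are ours, and it assumes a positive, finite input.

```python
import struct


def fast_inverse_sqrt(x: float, newton_steps: int = 1) -> float:
    """Approximate 1/sqrt(x) for positive x via the 32-bit float bit trick."""
    # Reinterpret the float32 bit pattern of x as an unsigned 32-bit integer.
    i = struct.unpack("<I", struct.pack("<f", x))[0]
    # Subtracting half the bit pattern from the magic constant gives a rough
    # first guess, because float bits approximate a scaled log2 of the value.
    i = 0x5F3759DF - (i >> 1)
    # Reinterpret the integer bits back into a float.
    y = struct.unpack("<f", struct.pack("<I", i))[0]
    # Refine the guess with Newton's method on f(y) = 1/y^2 - x.
    for _ in range(newton_steps):
        y = y * (1.5 - 0.5 * x * y * y)
    return y


if __name__ == "__main__":
    for value in (0.25, 1.0, 2.0, 4.0, 100.0):
        print(value, fast_inverse_sqrt(value), value ** -0.5)
```

With a single Newton step the result should land within a fraction of a percent of the exact value, which was good enough for lighting math in a 90s game engine.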

🤯 Talking about determination and crazy shit, serial experimenter Nolen strikes again, this time arranging 240 browser tabs in a tight 8x30 grid and using favicons for playing Pong. In this article, he explains how he keeps doing unimaginable feats in great detail.

🪄 CSS Wizard Josh Comeau came up with a new course, Whimsical Animations, and, as always, a state-of-the-art website. In a blog post, he deconstructs the “Whimsical Animations” landing page’s million little secrets and shares CSS tips like always. If you are afraid of CSS, read this post and feel the love this man has for details in animation.

What we have been trying 🔖

🛫 Flight Price Predictor: Airtrackbot’s AI-powered flight price predictor helps you decide whether to book tickets now or wait a bit.

🐐 Scott Chacon shares and talks about How Core Git Developers Configure Git. Most of us take gitconfig for granted and don’t customize it to the fullest once Git is installed. Scott shares a config that even core developers working on Git use, explains each setting in it, and urges you to customize your own. (We really expect a better experience after modifying these settings.)

🧑‍💻 Warp: The AI-native terminal with a relatively cleaner user interface and other options (if you are looking to migrate from iTerm2 and for some reason don’t want ghostty). Also, they just released a Windows version last week; if you have a Windows machine, you probably don’t have many options as nice as this.

Builders’ Nest 🛠️

🍘 JSON Crack: Format JSON and transform it into a readable graph.

🫦 React Bits: An open-source collection of high-quality, animated, interactive & fully customizable React components.

⚒️ Smithery: A platform with a list of popular MCP Servers to use with your AI apps, like Cursor

🧮 Corca: A collaborative math editor for working on equations intuitively on a computer, unlike LaTeX, which requires learning a special syntax and has a learning curve

Meme of The Week 😌

Off-topic Reads/Watches 🧗

🕊️ Nobody Profits by George Hotz, where this 10x dev urges us not to let scammers monetize AI like they did with the Internet, and to put all our might into "build technology and open source software that market breaks everything", making AI deliver huge value but no profit to anyone.

🚀 Being a High-Leverage Generalist by Char emphasizes that rather than competing (with both humans and AIs) in specialized, narrow, crowded fields, it is much more valuable for you to be a high-leverage generalist and apply your knowledge strategically to connect ideas across disciplines, adapt to new challenges, and maintain optionality in your career.

🎯 Joe Lonsdale explains Thiel’s “One Thing” rule and discusses how focus has a convex output curve.

🪤 Trapped by

, where she goes through what triggers our fight-or-flight responses and how to pause and plan to remain calm instead. (It includes some good relatable stories, too.)

Wisdom Bits 👀

“Any success takes one in a row. Do one thing well, then another. Once, then once more. Over and over until the end, then it's one in a row again.”

— Matthew McConaughey

Wallpaper of The Week 🌁

🌌 Grab the week’s wallpaper at wow.nibbles.dev.

Weekly Standup 🫠

Nibbler A had a review, fix, and build-on-the-side week. He played lots of chess to cope, and his weekly physical activity has been at an all-time low. On the weekend he spent 30 mins making a little tool for himself.

Nibbler P pushed to prod, watched some 🧛‍♂️ stuff, successfully drove away Mongols from Feudal Japan, and went on a long drive with his family.

If you have two minutes, please fill out a feedback form here. Your input will help us improve “The Nibble” for you and other readers!

¹ Greentext: a style of writing and communication that originated on 4chan