#84

LLM Search, Small model FTW, OpenAI Predicted Outputs, Fault Proofs, Polygon pivot, Better JSON, Contactless Crypto and more

👋🏻 Welcome to the 84th!

We had 100s of tabs open this time, wrote and churned a lot of content to bring this one to your inboxes.

🗽 While America decides which pill to swallow (UPDATE: they chose red), let’s dive in, dear Neo.

📰 Read #84 on Substack for the best formatting

🎧 You can also listen to the podcast version of it on NotebookLM (it’s really awesome!)

What’s happening 📰

✨ AGI Digest

🔎 LLM-Assisted Search goes big this week

🕵 After testing its Search use cases using the SearchGPT prototype for some time, OpenAI released ChatGPT Search, a way to integrate information from the web with links to relevant sources when answering a user’s query. OpenAI’s recent partnership spree has also brought in some data providers to show things like weather, stocks, sports, news, and maps with much more precision and better design. It also has a Chrome extension which makes ChatGPT the default omnibar search engine in the browser.

Internally, it uses a fine-tuned version of GPT-4o, post-trained on synthetic data, including distilled outputs from OpenAI o1-preview (always good to see companies using their own products).

🕳️ And the very same day, Google announced that the Gemini API and Google AI Studio both rolled out “Grounding with Google Search”, which adds Google Search results to Gemini’s context window before it generates a response, reducing hallucinations and improving factual accuracy. While it is free in AI Studio, it costs $35 per 1,000 grounded queries in the API and is enabled with the 'google_search_retrieval' tool, which suggests it’s essentially a tool call + RAG in the background. What’s interesting, though, is that the API also supports ‘Dynamic Retrieval’, which assigns a score to the user’s input to decide whether grounding is needed, cutting out calls where grounding would probably not have helped.
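To make the Dynamic Retrieval idea concrete, here is a minimal Python sketch of score-based gating. It is not Google's implementation: the `Query` class, the `prediction_score` field, and the 0.3 threshold are hypothetical names and values we picked for illustration. Only the $35 per 1,000 grounded queries figure comes from the pricing mentioned above.

```python
from dataclasses import dataclass

# Price quoted for the Gemini API: $35 per 1,000 grounded queries.
PRICE_PER_1000_GROUNDED = 35.0


@dataclass
class Query:
    text: str
    # Hypothetical score in [0, 1]: higher means the query is more
    # likely to benefit from fresh search results.
    prediction_score: float


def should_ground(query: Query, threshold: float) -> bool:
    """Ground the query only when its score reaches the threshold."""
    return query.prediction_score >= threshold


def grounding_cost(queries: list[Query], threshold: float) -> float:
    """Dollar cost of the queries that end up being grounded."""
    grounded = sum(should_ground(q, threshold) for q in queries)
    return grounded * PRICE_PER_1000_GROUNDED / 1000


# Made-up queries and scores, purely for illustration.
queries = [
    Query("Who won the F1 race last weekend?", 0.9),
    Query("Write a haiku about autumn", 0.1),
    Query("What is 2 + 2?", 0.05),
    Query("Latest stable Node.js version?", 0.7),
]

# Only the two queries scoring at or above 0.3 are grounded.
print(f"${grounding_cost(queries, threshold=0.3):.2f}")  # $0.07
```

A threshold of 0 grounds every query, and raising it trades freshness for fewer billed calls.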

🚢 Anthropic didn’t stop shipping this week either

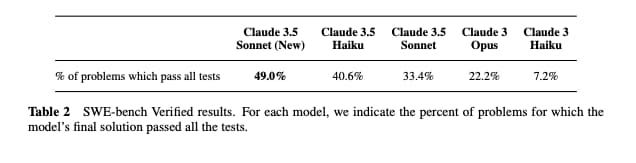

🪶 Kicking off the week on Monday, we got the new Claude 3.5 Haiku, which shows quite good performance on coding benchmarks and agentic abilities, even outperforming the old 3.5 Sonnet and 3 Opus on some tasks. That being said, it also seems like they have changed the model architecture a little, leading to decreased response throughput and a 4x increase in API pricing compared to 3 Haiku. So if you need a cheap, fast, and intelligent general-purpose model, you’re still better off with GPT-4o mini or Gemini 1.5 Flash for now.

Source: Claude 3 Family Model Card

🏞️ The Claude App can now view images in PDFs, in addition to text. You can turn on the feature preview by going to https://claude.ai/new?fp=1. In addition, the new Claude 3.5 Sonnet API natively supports sending PDFs in API requests.

🎫 Anthropic finally, finally made counting tokens for the Claude 3 family easier by introducing the free Token Counting API. Previously, there was no way to do it except to make the actual model call and check the response, lol.

⚓️ Model Drops:

📱 Facebook released MobileLLM, a family of really small (125M, 350M, 600M, 1B, and 1.5B) models that achieve SoTA performance in the 125M/350M range. The team used deep and thin architectures coupled with embedding sharing and grouped-query attention to squeeze the last bits of improvement out of these LLMs, which are small enough to run on edge devices.

🤏 Talking of small LLMs, HuggingFace released SmolLM2 this week with an Apache 2.0 license and excellent performance in the ~1B range (you know it’s actually good when they bring Qwen into the comparison and even beat it in several benchmarks). They also released a 135M and a 360M model along with it, and the team said they’d publish a full blog post and training scripts to complete the release. From the benchmarks, it looks like SmolLM2 excels in some areas while Facebook’s similarly-sized MobileLLM excels in others.

Source: Tweet by @loubnabenallal1

🍪 And AMD released AMD OLMo, its first open-source LLM, based on Allen AI’s OLMo-1B model. The major differences between AMD’s model and AllenAI’s are that AMD pre-trained with less than half the tokens used for AllenAI’s OLMo-1B, and that they also did post-training alignment using SFT and DPO to enhance general reasoning, instruction-following, and chat capabilities. BTW, this is also a demonstration of AMD’s ability to build GPUs and the associated software that make training models as easy as doing it on Nvidia cards.

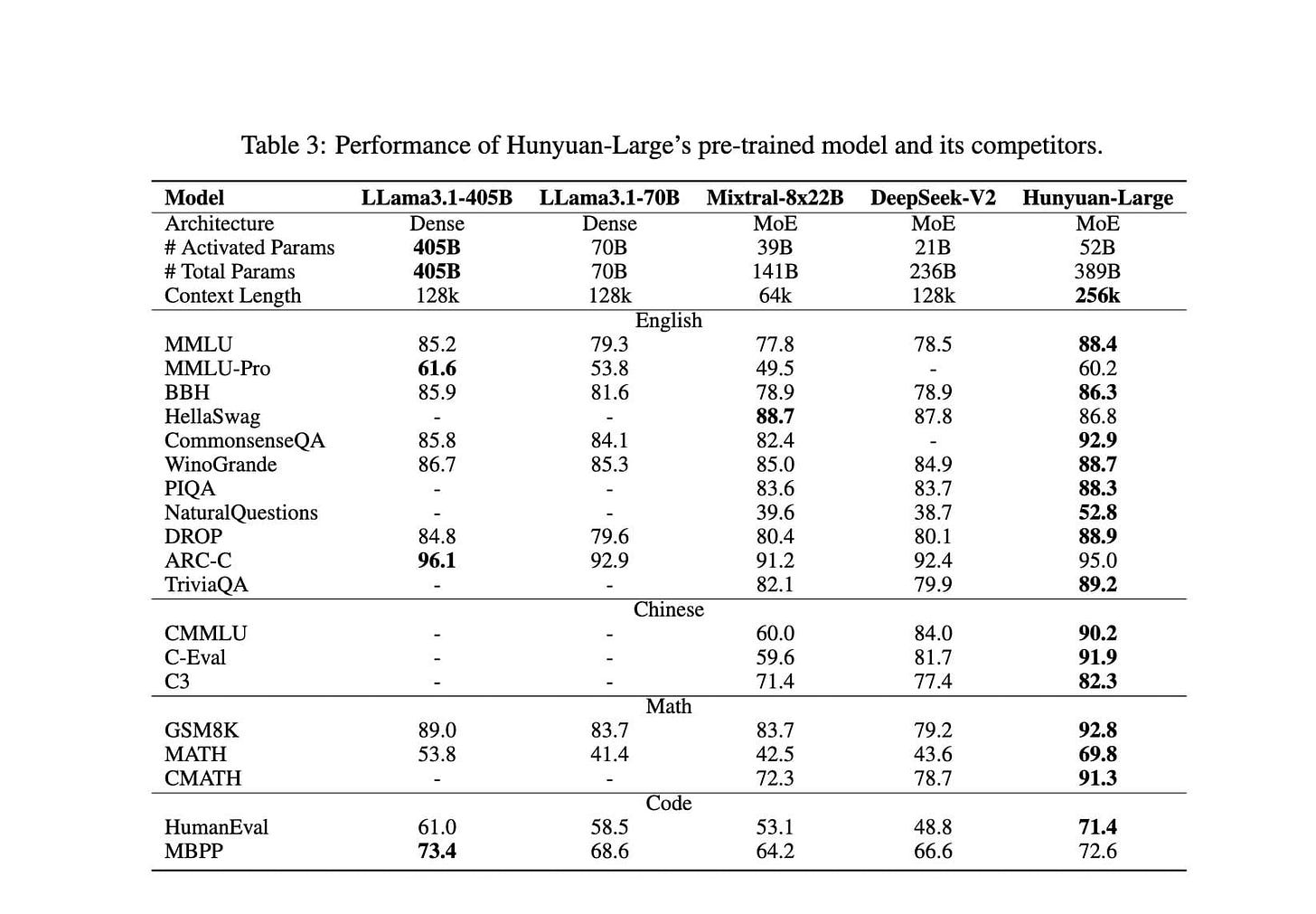

🕹️ Tencent released Hunyuan Large, the largest open-source MoE model with 389B parameters, which outperforms Llama-3.1-405B, Mixtral-8x22B, and DeepSeek-V2 on many benchmarks. It was trained on high-quality synthetic data and shows quite strong performance in Maths and Coding.

Source: Hunyuan Large Paper

🍱 Misc Announcements:

🔮 OpenAI rolled out Predicted Outputs for GPT-4o and GPT-4o mini, which uses Speculative Decoding (a first-time offering by any frontier-level LLM provider) to speed up generation when the final output is not much different from a given input. Think of use cases like editing a code file or updating a document where there are only small changes to the file: those are the scenarios where this can lead to speed improvements.
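As a rough illustration, this is what a Predicted Outputs request could look like in Python. The sketch only builds the request arguments and makes no network call. The helper name `build_predicted_output_request` is ours, and the shape of the `prediction` field reflects our reading of OpenAI's API docs, so check the current reference before relying on it.

```python
def build_predicted_output_request(
    original_code: str,
    instruction: str,
    model: str = "gpt-4o",
) -> dict:
    """Build keyword arguments for a chat completion that uses a prediction.

    The existing file is passed twice: once as context for the model and
    once as the predicted output, since most of it should come back unchanged.
    """
    return {
        "model": model,
        "messages": [
            {"role": "user", "content": instruction},
            {"role": "user", "content": original_code},
        ],
        # Assumed field shape: the text we expect most of the output to match.
        "prediction": {"type": "content", "content": original_code},
    }


original = "def greet(name):\n    print('Hello ' + name)\n"
request = build_predicted_output_request(
    original,
    "Rename the function to say_hello and reply with only the code.",
)

# With the official SDK installed and an API key configured, this could be
# sent with something like: client.chat.completions.create(**request)
print(sorted(request))  # ['messages', 'model', 'prediction']
```

The closer the real output stays to the prediction, the more tokens can be accepted without being generated one by one, which is where the speed-up comes from.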

🗣️ ElevenLabs and Andrew Huberman joined hands to dub HubermanLab’s content from English to Hindi and Spanish, clips of which are available on Huberman’s Clips Channel. And after listening to the Hindi dub, we’ve got to say that it is really impressive! Most other Hindi dubs tend to use pure Hindi words that are, more often than not, not in colloquial use. ElevenLabs keeps it realistic and in line with the Hindi that regular people actually speak these days.

🐼 After teasing it for some days, fal.ai and Recraft announced Recraft v3, aka the Red Panda text-to-image generation model. It is currently the highest-ranked model on Artificial Analysis’s Text to Image Leaderboard, scoring even above Flux 1.1 Pro. It can also natively produce vectorized images, which is a first for general T2I models.

📝 Grok is now accessible via a Public API at console.x.ai with $25 of free API credits per month until the end of the year for everybody to test the API. Currently, the API only has their grok-beta model, which costs $5/M input tokens and $15/M output tokens. This model is still under active development, though, and is likely to get multimodal support soon.

🔐 0x Digest

🤝🏻 Fault proofs are now live on Base Mainnet. This is a big steppuhh, as Base already has plenty of users and funds moving on it, and with fault proofs it just became more secure as an L2. This is one of the key steps for them to move from a Stage 0 to a Stage 1 roll-up (shedding some training wheels), which they plan to reach completely in the next few months.

📦 Sandeep says that Polygon has shifted from a GTM-first to a product/research-first organization in the last 2 years. Polygon was both criticized and applauded a lot publicly for being GTM-first in the last few years, so this changes the narrative of one of the biggest projects in crypto.

🌪️ A developer rebuilt Tornado Cash, the OG non-custodial Ethereum and ERC20 privacy solution based on zkSNARKs with updated versions of tools used underneath.

💳 An interesting proposal, ERC-7798: Initializing contactless payment transactions from EVM wallets, popped up last week. The proposal is still raw, but it’s headed in a good direction and could be the start of a new era of contactless, wallet-abstracted, efficient peer-to-peer payments using crypto. (a crypto VC got multiple orgasms while I was writing this sentence.)

🛠️ Dev & Design Digest

🖱️ Jake wrote about How should <selectedoption> work. This is one of the actively discussed HTML standards proposals. The major open issue is that the current proposal clones the content of <option> as-is into <selectedoption>, but how often and how deeply the tree is cloned is not defined yet.

⛴️ Wasmer 5.0 is out, bringing plenty of useful features for Wasm devs. They have added support for more backends: V8, Wasmi, and also WAMR. This in itself is a major unlock, as with V8, Wasmi, and WebAssembly Micro Runtime (WAMR) devs can now run Wasm on iOS (they mention that help from Holochain made it possible).

Although they had JSCore backend support for a year, iOS caps the ability to use Wasm using JSCore.

Also, module deserialization is up to 50% faster (thanks to a few updates on rkyv).

🥊 The JS DevTool ecosystem is healthier than ever, with new and faster tools pushing the old ones. Here’s a good write-up by Lee that compares them succinctly.

🗡️ The folks at ClickHouse have built a new powerful JSON data type aimed to replace the traditional way JSON data is stored in a typical OLAP columnar format, addressing its limitations and improving overall functionality.

What brings us to awe 😳

📏 You might have heard that “your blood vessels taken together add up to 100,000 km, enough to wrap Earth 2.5 times”. But have you ever gotten it fact-checked? We Fell For The Oldest Lie On The Internet by Kurzgesagt

🌫️ The best way to hide something in a picture is to straight-away blacken, crop, or hide it out with something opaque. DO NOT TRUST BLURRING TO HIDE INFORMATION IN AN IMAGE.

⏱️ We knew time zones were confusing. But we didn’t know they were this weird. From +05:45 to half-an-hour DST timeshifts to DST transitions at -1 o’clock (yes, negative), you have the perfect recipe for wrecking a programmer’s life and yet, it’s all surprisingly manageable. To all of those who say software is easy, send them this article: Time management is a mess.
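For a small taste of the weirdness, here is a Python sketch of a +05:45 offset (the one Nepal uses). It relies on a fixed offset from the standard library rather than a time zone database lookup, so it does not model DST rules at all. The `NEPAL` name and the example date are just our choices for illustration.

```python
from datetime import datetime, timedelta, timezone

# Fixed +05:45 offset; a real application would use a tz database instead.
NEPAL = timezone(timedelta(hours=5, minutes=45), name="NPT")

utc_midnight = datetime(2024, 11, 6, 0, 0, tzinfo=timezone.utc)
local = utc_midnight.astimezone(NEPAL)

print(local.isoformat())  # 2024-11-06T05:45:00+05:45
```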

🤑 Two 16GB/256GB Mac minis cost $1 less than a single 32GB/512GB Mac mini, showing Apple's consistent pricing (no matter how absurd it might sometimes be). Is it worth saving the $1, though? Absolutely not! When it comes to unified memory, two 16GBs are inferior to a single 32GB, and if you think you just saved a dollar, you’ll regret it pretty soon.

Source: Tweet by @seatedro

Today I (we) Learnt 📑

🤔 In Arabic, there is no "aey" sound, so it does not have Fair. It'll either be Feer or Faar or Foor. (Shared by Munawar)

🤡 Have you ever felt like it’s easier to resolve others’ dilemmas or solve their problems? Well, because it is. It’s called Solomon's Paradox. It was named after King Solomon (yapper like us), who demonstrated wise judgment for others yet struggled in his personal life (us bro us).

👶🏻 In cases where babies are born over international waters or in regions without territorial rights (no man’s land?), the aircraft's registration nationality might come into play. For example, if the aircraft is registered in Germany, the baby may be treated as German. [Source: Twitter]

🍾 Have you ever considered something superior because it was more expensive than the alternatives? Well, you are not alone. We all do it, and this psychological phenomenon is called the “Chivas Regal effect”. And yes, they actually did this and found that it works: they just increased the price of their scotch and sales picked up.

🤝 You have read ~50% of Nibble. The following section brings tools out from the wild.

What we have been trying 🔖

🗺️ uMap lets you quickly build custom maps with OpenStreetMap’s background layers and integrate them into your website.

👨🏻‍💻 nandgame: Build a computer starting from basic components.

🤡 PDF to Brainrot: our favorite new way to read research papers and satisfy our dopamine addiction. Here’s what we got when we converted Solana’s Whitepaper to Brainrot.

🌐 LocalXpose: a reverse proxy that enables you to expose your localhost to the internet. Just loclx it.

Builders’ Nest 🛠️

🖥️ nvm-desktop: a desktop application that manages multiple Node versions in a visual interface.

👓 harper: A privacy-preserving grammar checker, faster than Grammarly, and less memory-consuming than LanguageTool.

⁉️ shell.how: Explain shell commands using next-generation autocomplete Fig.

📄 augraphy: Augmentation pipeline for rendering synthetic paper printing, faxing, scanning and copy machine processes

Meme of the week 😌

Off-topic reads/watches 🧗

⛽ Choose your fuel Wisely by Seth talks about how we sometimes choose the wrong source of motivation or metric. It may help us ship things on time, but in the long run we might run out of fuel.

📿 The Quiet Art of Attention by Stormrider is about paying attention to the present, taking in small deliberate changes, and embracing the quiet and peaceful moments.

✍🏻 Writes and Write-Nots by Paul Graham, where he thinks and writes about how technology (specifically AI) will eliminate the “okay writers” and leave only the “good writers” and the “can’t write” people. This is bad, as “writing is thinking”.

🫂 Care Doesn’t Scale by Steven mulls over how, despite how much scale can change the way we impact the world, there are some human experiences (caring for your kids, taking care of parents, tending to people with special needs) that one just cannot scale without changing the nature of the experience.

Wisdom Bits 👀

“People have a hard time letting go of their suffering. Out of a fear of the unknown, they prefer suffering that is familiar.”

— Thich Nhat Hanh

→ So, let it go.

Wallpaper of the week 🌁

🌌 Grab the week’s wallpaper at wow.nibbles.dev.

Weekly Standup 🫠

Nibbler A had a busy week catching up with friends and family, mostly wrapping up Diwali work. He is touching racquets regularly again (🤞🏻), reading up on the things he’s been avoiding for a few years, and plans to tick a few anime/movies off the list too.

Nibbler P was on a post-Diwali high this week, gulping down all the sweets he could still find around him. He used the weekend to read some tech stuff and catch up on some movies and anime from his watchlist.

If you liked what you just read, recommend us to a friend who’d love this too 👇🏻