#86

Inference-time scaling APIs, Agentic Editor, new model drops, Beam Chain, iAgent, Russia Crypto ban, Deno WASM and more

👋🏻 Welcome to the 86th!

So, we are back to kind-of-weekend shipping. With the next edition, we will probably close this cycle and start shipping on weekends again (like the good ol’ days).

📰 Read #86 on Substack for the best formatting

🎧 You can also listen to the podcast version, powered by NotebookLM (a little glitchy and prone to hallucinating, though)

What’s happening 📰

✨ AGI Digest

🤔 Inference-time Scaling LLM APIs:

📑 Nous Research released the Forge Reasoning API which allows you to apply inference-time scaling to various models (like Nous Hermes 70B, and models from Anthropic, OpenAI and Google), dramatically improving their reasoning capabilities. The API integrates various LLM inferencing techniques in the reasoning layer, such as Mixture of Agents, Chain of Code, and Monte Carlo Tree Search to create a comprehensive system for enhanced reasoning capabilities. The API is currently in Beta and you can sign up for access here.

🎇 Fireworks released f1 and f1-mini, which specialize in “compound reasoning” tasks and again employ inference-time scaling to make the model reason better. The details of the models are not described yet, but going by their benchmarks, they beat Llama 3.1 405B, GPT-4o and Claude 3.5 Sonnet on a lot of maths, coding and reasoning tasks. You can sign up for the beta access here.

🏄‍♂️ Codeium released the Windsurf Editor, its AI-powered code editor (a fork of VS Code). Its “Cascade” feature uses agentic capabilities called “flows” to not just do RAG over your codebase and come up with a text-based solution in chat, but also interact with your codebase: it can manipulate your files, use the terminal to execute commands, and give you a general overview of the changes. Think of Cursor, but with a few more agentic capabilities.

🤝 AI Product Updates:

🌌 While everybody was expecting OpenAI to finally drop o1, we instead got:

💻 The ChatGPT macOS app can now read content from external apps, currently supporting coding tools like VS Code, Xcode, TextEdit and Terminal, to provide context-aware answers. The feature is available in beta for Plus and Team users in the app.

🎏 We also got o1-preview and o1-mini streaming API and open access to both models across all paid usage tiers.

🎨 Plus, GPT-4o got a new update, versioned gpt-4o-2024-11-20, with enhanced creativity compared to the previous checkpoint. However, users have reported that its performance on MATH and science benchmarks has decreased compared to the previous checkpoint while being way faster (~80 output tokens/s to ~180 tokens/s), suggesting that OpenAI might have sneakily used a smaller model for it.

Source: Tweet by @adonis_singh

📣 Anthropic, meanwhile, introduced a new Prompt Improver in their console, which improves your existing prompts using a mix of CoT, example standardization, example enrichment, rewriting and more. Plus, for those on the Claude Pro and Team plans, they introduced the Google Docs Integration, allowing you to directly import your Google Docs files into your conversations with Claude.

⚓️ Model and Dataset Drops:

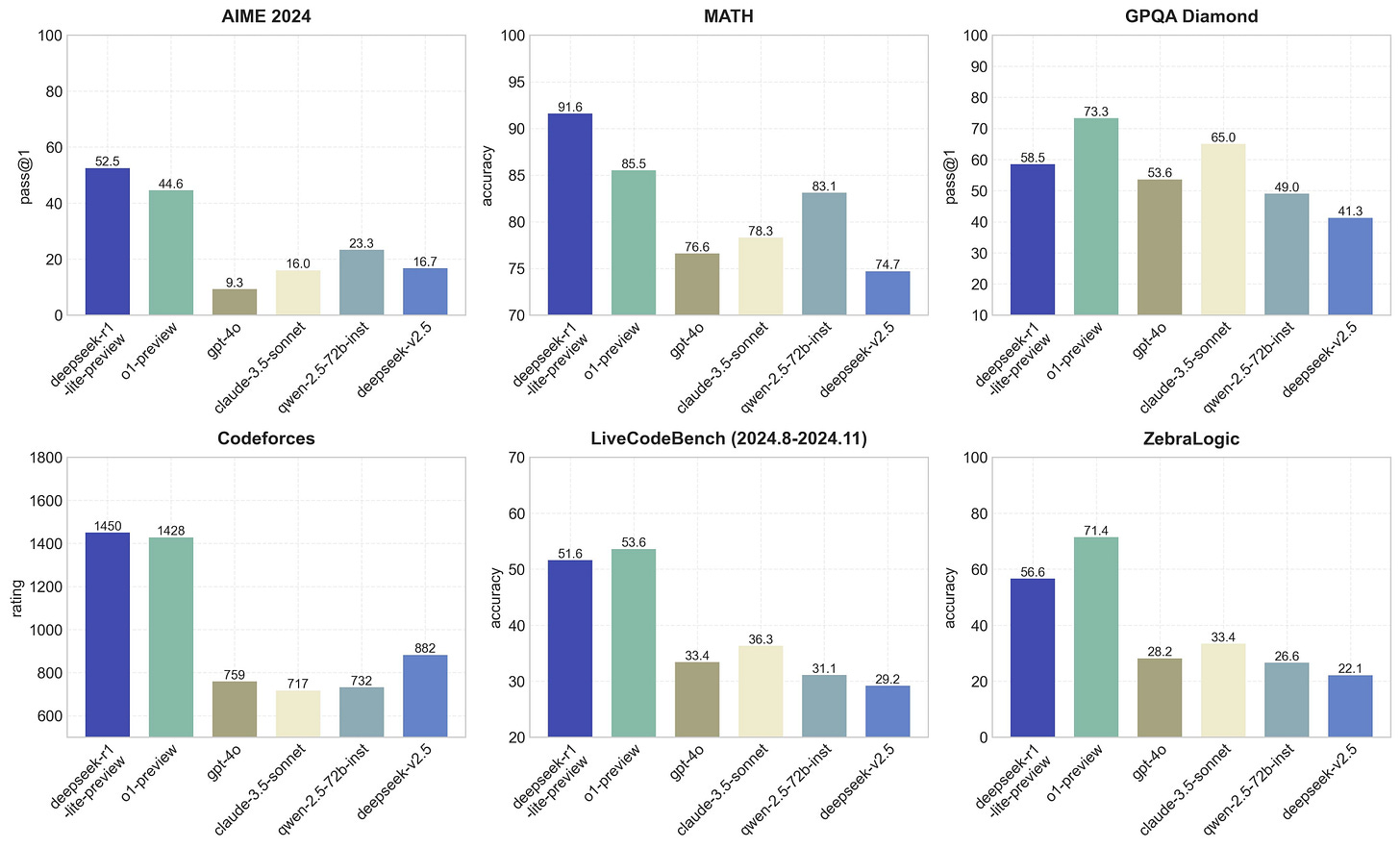

🐳 DeepSeek dropped DeepSeek-R1-Lite-Preview, a reasoning model that competes with OpenAI’s o1-preview on reasoning tasks while possibly being a much smaller model. Plus, they show the complete reasoning tokens and plan to open-source the model along with an API release. Currently, you can try it for free at chat.deepseek.com with 50 free messages every day.

♊️ The Google DeepMind team released two new experimental Gemini models, tussling with OpenAI over who takes the #1 rank on the Lmsys overall (without style control) benchmark. Both releases boast enhanced reasoning and coding capabilities, though they also tend to hallucinate more in some aspects. As of writing this post, the latest GPT-4o takes the first spot on the overall (with style control) benchmark, followed by o1-preview, with the new Gemini-Exp-1121 in third and Claude 3.5 Sonnet after it. Though if you know anything about Lmsys, it is that you should most certainly trust your own evals and intuitions over it.

⚡️ After the banger release of the Qwen2.5 and Qwen2.5-Coder families of models, Alibaba’s Qwen team released Qwen2.5-Turbo with a 1M context length (equivalent to 10 full-length novels, 150 hours of speech transcripts, or 30,000 lines of code). They also use sparse attention to increase the inference speed despite this massive context window. The model is currently only available via the Qwen API.

🎐 Mistral released Pixtral Large and an updated Mistral Large 2, making both their weights available under the Mistral Research License and the Commercial license. Pixtral Large beats the vision LLMs from many frontier labs on some benchmarks, but if you’re going by benchmarks, Qwen2-VL still outcompetes it while having only ~58% of its parameters. Mistral also revamped its chat with web search, canvas support, better image and doc understanding, image generation (powered by Flux Pro) and faster responses via speculative editing. That’s a hell of a lot, and it’s available for free!

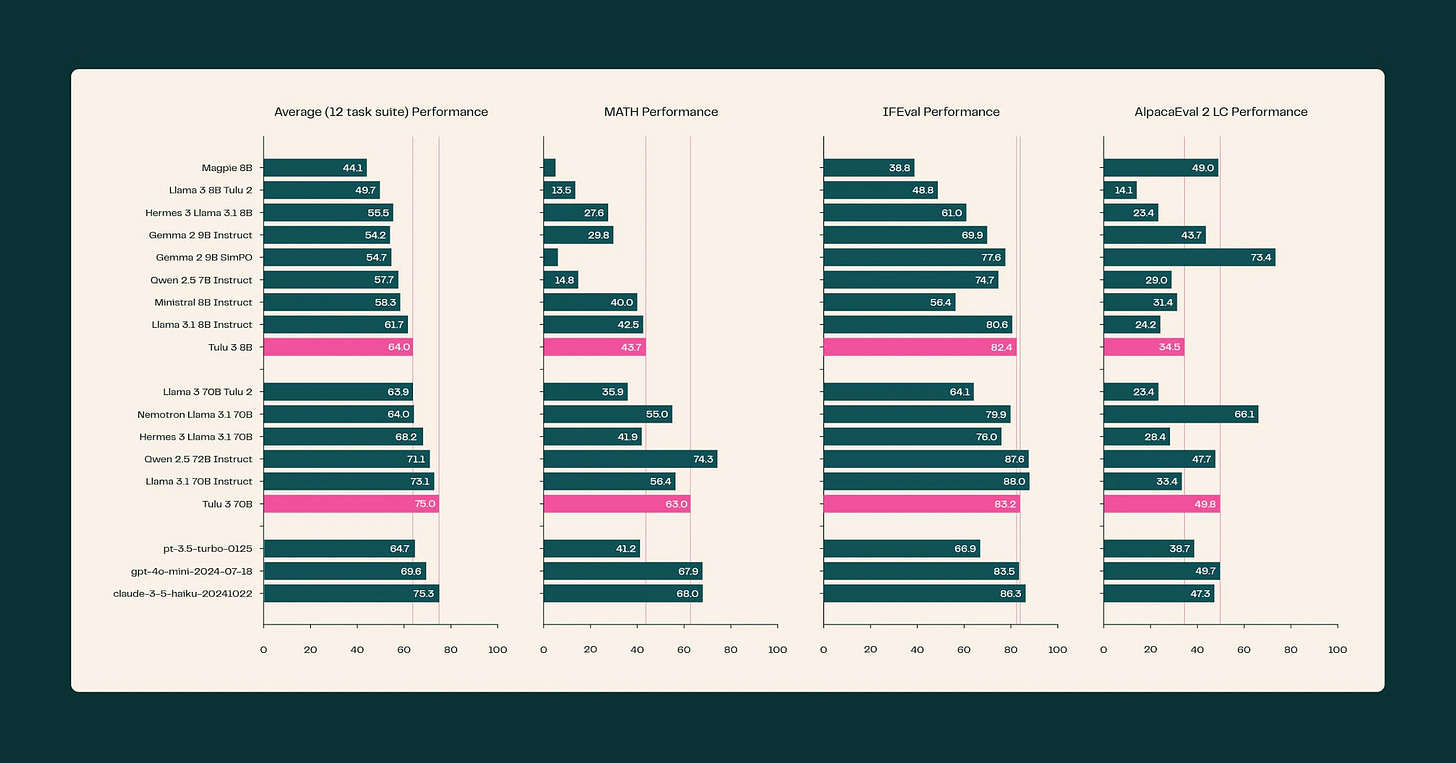

🗣️ AllenAI released Tülu 3, a family of SoTA 8B and 70B models with fully open data, eval code, and training algorithms. The models are finetuned versions of Llama-3.1 8B and 70B, with the 8B surpassing Qwen 2.5 7B and the 70B outperforming Qwen 2.5 72B Instruct, GPT-4o Mini, and Claude 3.5 Haiku on several benchmarks.

🤗 HuggingFace released the SmolTalk Dataset containing a massive 1M publicly available and synthetically generated samples of diverse tasks including text editing, rewriting, summarization, and reasoning. The same dataset was used for training their SmolLM v2 model and is available under the Apache 2.0 license.

🔬 The Jameel Clinic at MIT released Boltz-1, an OSS model for modelling complex biomolecular interactions, achieving AlphaFold3-level accuracy in predicting the 3D structure of biomolecular complexes. The source code is available on GitHub.

🔐 0x Digest

🔦 Ethereum Foundation’s Justin Drake proposed a major design overhaul and upgrade for the consensus layer (Beacon Chain), and they are calling it “Beam Chain”. The idea is to purge all the pre-merge tech debt, add (not force) some zk magic ✨, and make the chain go brr. For once, they might be building something that “Matters” (iykyk)

🤖 Injective launched an SDK called iAgent that lets you easily build on-chain agents that can monitor markets and make on-chain trades for you, so that you can sleep in this bull market too.

🇷🇺 In their master plan to slowly regulate crypto, ~~Mother~~ Aunt Russia now plans to restrict crypto mining activity in 13 regions to save up some electricity.

▼ In the middle of “The Bull”, Sui managed to get 2 hours of downtime as the chain stalled. The root cause was some “bug in transaction scheduling logic that caused validators to crash”. This brought Sui’s token down by ~10% for a brief amount of time (you know, like our time on earth).

🏖️ RISC Zero introduced Kailua, a hybrid architecture that gives OP chains 1-hour finality without the higher cost of constant ZK proving. How? ah! The crux is “Only prove during disputes” 🤡

🛠️ Dev & Design Digest

🦕 Deno adds support to import Wasm (default instances for now, modules coming later).

🚀 OpenNext has launched their AWS adapter “opennextjs-aws”, which takes the NextJS build output and allows you to deploy it on AWS Lambda with “almost no-cold start”. This is a good move for Next’s Open (not Vercel-locked) future (doesn’t matter if it’s bad as a concept for the world).

🌐 Chrome controls more than half of the browser market right now, and this started bothering the DoJ; they think it’s ~~fatherless~~ monopoly behavior. In an interesting turn of events, the DoJ ended up asking the court to make Google sell Chrome (for ~$20B or so), start revenue-sharing, stop promoting ads in search, and more fairy tales.

What brings us to awe 😳

🛤️ The Transit app wrote about how they are now leveraging vibration data (not that one, you sukebe) to locate underground trains when GPS is spotty. They sent their engineers to travel (a lot) on all the trains in sight, record the vibrations from phones, and label them. Having collected all that data and trained on it, they can now track your location in areas where cell networks or GPS are unreliable.

⚛️ Physicists at ETH Zürich have created the first fully mechanical qubit. Instead of holding just a 0 or a 1, a qubit stores data in a superposition of both states. Traditionally, this was hard to do because qubits are very unstable. For this experiment, the researchers built what they describe as a membrane, similar to a drum skin, that can hold information as a steady state, a vibrating state, or a state that is both at once.

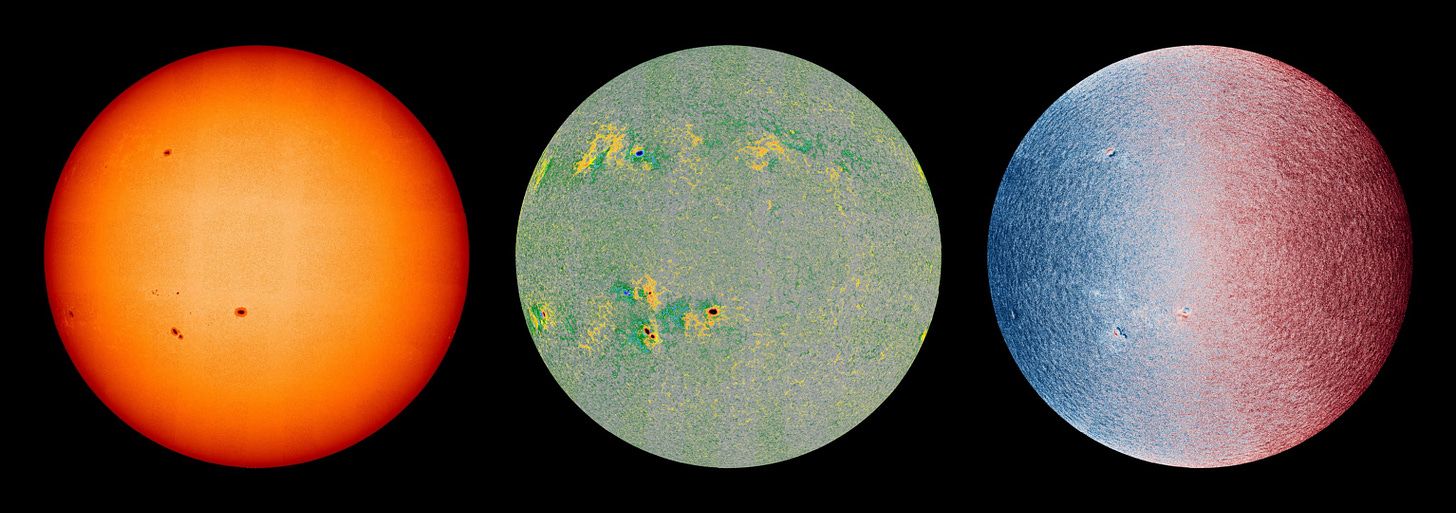

🌞 We got the highest-resolution images of our Sun’s visible surface to date! The European Space Agency’s (ESA) Solar Orbiter spacecraft captured multiple images at a distance of 46 million miles from the Sun. They were stitched together to create an image in which the Sun’s diameter is about 8,000 pixels wide.

📊 Data is beautiful, and you can tell different stories with it depending on how you visualize it, so here’s an infographic showing a hundred different ways you can visualize the same dataset.

Today I (we) Learnt 📑

🙊 JWT is actually pronounced as the English word “jot”!

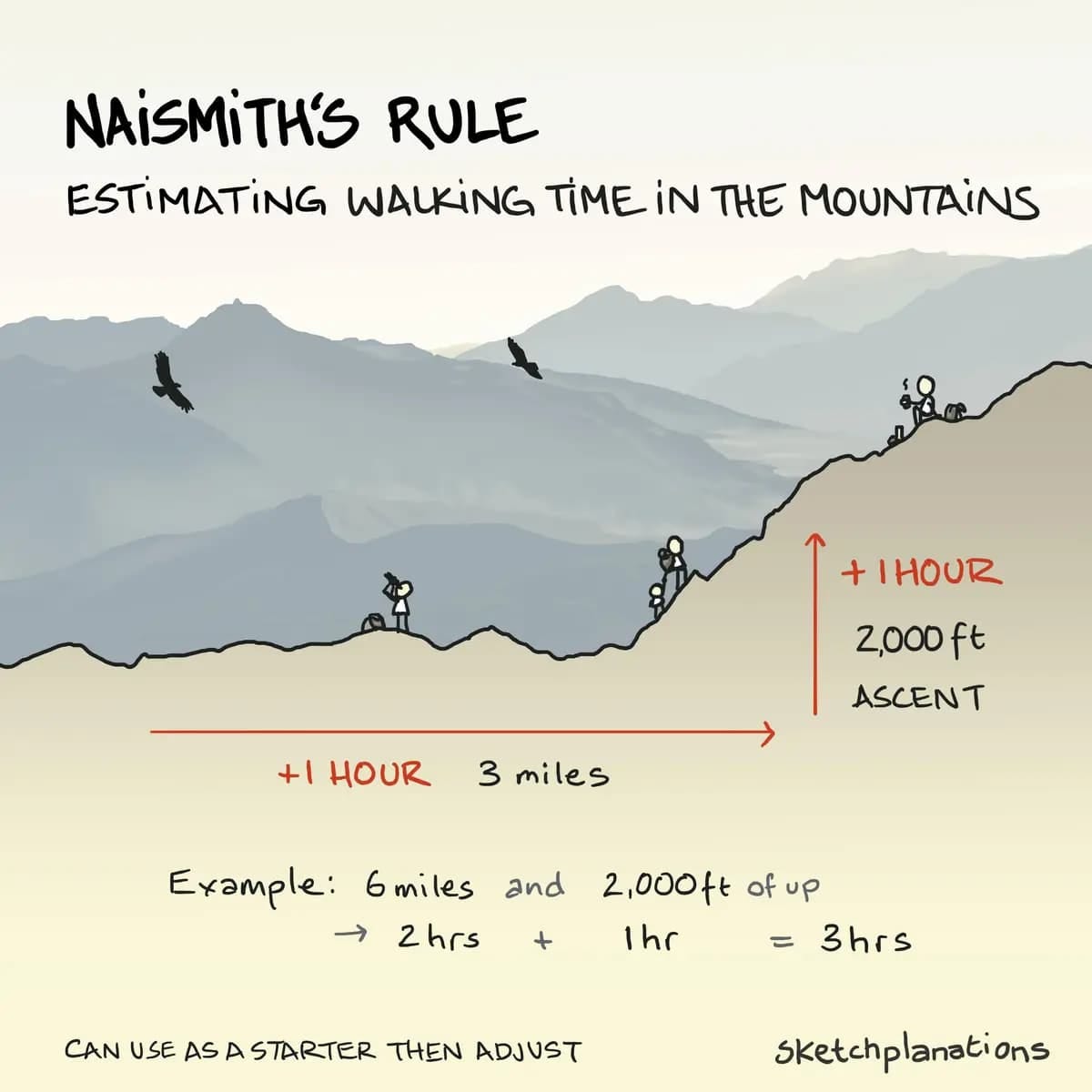

🥾 There’s Naismith's Rule, which you can use on your next hiking expedition to estimate the walking time. It goes like this:

It would take you ~1 hour for every 3 miles (5km) walked

And a +1 hour for every 2,000 ft (600m) of ascent
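A minimal sketch of the rule in Python, using the metric figures quoted above (1 hour per 5 km, plus 1 hour per 600 m of ascent). The function name `naismith_hours` is ours, purely for illustration, and the rule itself is only a rough estimate that ignores terrain, fitness, and breaks.

```python
def naismith_hours(distance_km: float, ascent_m: float) -> float:
    """Rough walking time in hours using Naismith's Rule.

    1 hour for every 5 km walked, plus 1 hour for every 600 m climbed.
    """
    if distance_km < 0 or ascent_m < 0:
        raise ValueError("distance and ascent must be non-negative")
    return distance_km / 5.0 + ascent_m / 600.0


# A 10 km hike with 600 m of climbing: 2 hours walking + 1 hour for the ascent.
print(naismith_hours(10, 600))  # 3.0
```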

🔢 Roman and Hindi numerals share a common way of representing some numbers, called the subtractive principle, whereby a smaller value is subtracted from a larger one to represent a number. For example, the Roman numeral four (4) can be written as IIII, but Roman uses a 5-minus-1 formulation in IV instead. Similarly, to say 19, Sanskrit/Hindi use unnīs (19), which is un (minus 1) + īs i.e. bīs (20), or untīs (29) as un (minus 1) + tīs (30).
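Here is a small Python sketch of how the subtractive principle plays out for Roman numerals. The table name `ROMAN_PAIRS` and the function `to_roman` are hypothetical, just for illustration; the trick is that the subtractive forms (IV, IX, XL, and so on) are listed as their own entries alongside the plain symbols.

```python
# Plain symbols and subtractive forms, largest value first.
ROMAN_PAIRS = [
    (1000, "M"), (900, "CM"), (500, "D"), (400, "CD"),
    (100, "C"), (90, "XC"), (50, "L"), (40, "XL"),
    (10, "X"), (9, "IX"), (5, "V"), (4, "IV"), (1, "I"),
]


def to_roman(n: int) -> str:
    """Convert an integer in 1..3999 to a Roman numeral."""
    if not 0 < n < 4000:
        raise ValueError("n must be between 1 and 3999")
    parts = []
    for value, symbol in ROMAN_PAIRS:
        # Take as many copies of this symbol as fit, then move on.
        count, n = divmod(n, value)
        parts.append(symbol * count)
    return "".join(parts)


print(to_roman(4))   # IV   (5 minus 1)
print(to_roman(19))  # XIX  (10 + (10 minus 1)), much like unnīs
```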

⬇️ The Sanskrit/Hindi word Dakṣiṇa (दक्षिण), which means South, is derived from the Proto-Indo-Iranian word dáćšinas, meaning “right side”, because when facing East (considered auspicious in Hinduism because it is associated with the rising Sun), the right hand points towards the South. The same PIE root also gives us the Latin word “dexter”, which means right, and hence the word “ambidextrous”, which describes somebody skillful in using both hands as if both were their right hand.

🤝 You have read ~50% of Nibble. The following section brings tools out from the wild.

What we have been trying 🔖

🧑🏻‍🎨 HTML to React & Figma by Magic Patterns: Convert HTML from any page to React and/or Figma

۞ zinit: A flexible and fast ZSH plugin manager suggested to Nibbler P by Claude, which dramatically reduced his shell loading times

🏖️ Stretch My Time Off: A tool that makes it easier to spread your holidays (depending on your location) throughout the year to create the most consecutive vacation periods.

✨ VSCode AI Toolkit: A toolkit to interact with both hosted and local models natively from the VSCode Interface.

Builders’ Nest 🛠️

🧰 modus: an open-source, serverless framework for building APIs powered by WebAssembly

🌐 browser-use: An easy way to connect AI agents with the browser

📋 The most used Open Source you didn't know about by @DeedyDas is a good searchable list of the most popular repos on GitHub.

💬 WikiChat: Reduce the hallucination of LLMs on general facts by retrieving data from a huge corpus of Wikipedia Indices.

Meme of the week 😌

Off-topic reads/watches 🧗

☕️ Measuring and Reporting Extraction Yield deep dives into the mindboggling maths behind measuring how to achieve the ideal extraction in your coffee concoction to get that perfect balance of flavor.

🎁 As the holiday season nears, here’s The Ken's 2024 Gifting Guide curated from nearly 500 responses received from The Ken’s subscribers regarding what they thought about gifting along with their philosophies, budgets, and emotions. Plus, most of the gifts mentioned here are not season-specific so you can just keep it handy all year round.

🥱 Be Bored and stop adding more and more stimulations to your life, this shall fix your fun hierarchy. That’s the only way to outwork most of the folks in 2024.

😫 On whining by Seth. He discusses how whining is a feature when limited and a bug when done excessively, and says verbatim, “There’s a difference between, ‘he’s whining,’ and ‘he’s a whiner’. We can do the first and avoid the second.”

Wisdom Bits 👀

“It doesn't matter what we want, once we get it, then we want something else.”

- Lord Baelish

― George R. R. Martin

Wallpaper of the week 🌁

🌌 Grab the week’s wallpaper at wow.nibbles.dev.

Weekly Standup 🫠

Nibbler A is on a temple run using trains in northern India. He has not seen good internet for a week and is looking forward to better internet speeds in the coming days.

Nibbler P has had a week full of work, movies, and exhausting gym sessions. He’d be on the move for the next two weeks and is expecting worse internet speeds in the coming days.

If you liked what you just read, recommend us to a friend who’d love this too 👇🏻