#88

Amazon Nova, Llama 3.3, Gemini-exp, OpenAI's 12 Days, Vitalik's ideal Wallet, React 19, Rainbow illusion, ADHD apps, Cherry Studio, Comma Operator, EVs eat Copper, EveryUUID.com and scrapybara & more

👋🏻 Welcome to the 88th!

A little delay but hey, we are here to cure your blues.

📰 Read #88 on Substack for the best formatting

🎧 You can also listen to the podcast version of this issue, powered by NotebookLM

What’s happening 📰

✨ AGI Digest

🌊 Meta plans to build a 40k+ km long, $10B subsea cable spanning the world. The plan is still in its early stages, and no physical assets have been laid as of now. Since Meta would be the sole owner of the project, the cable, once completed, would give Meta a dedicated pipe for data traffic around the world.

⚓️ Model Drops:

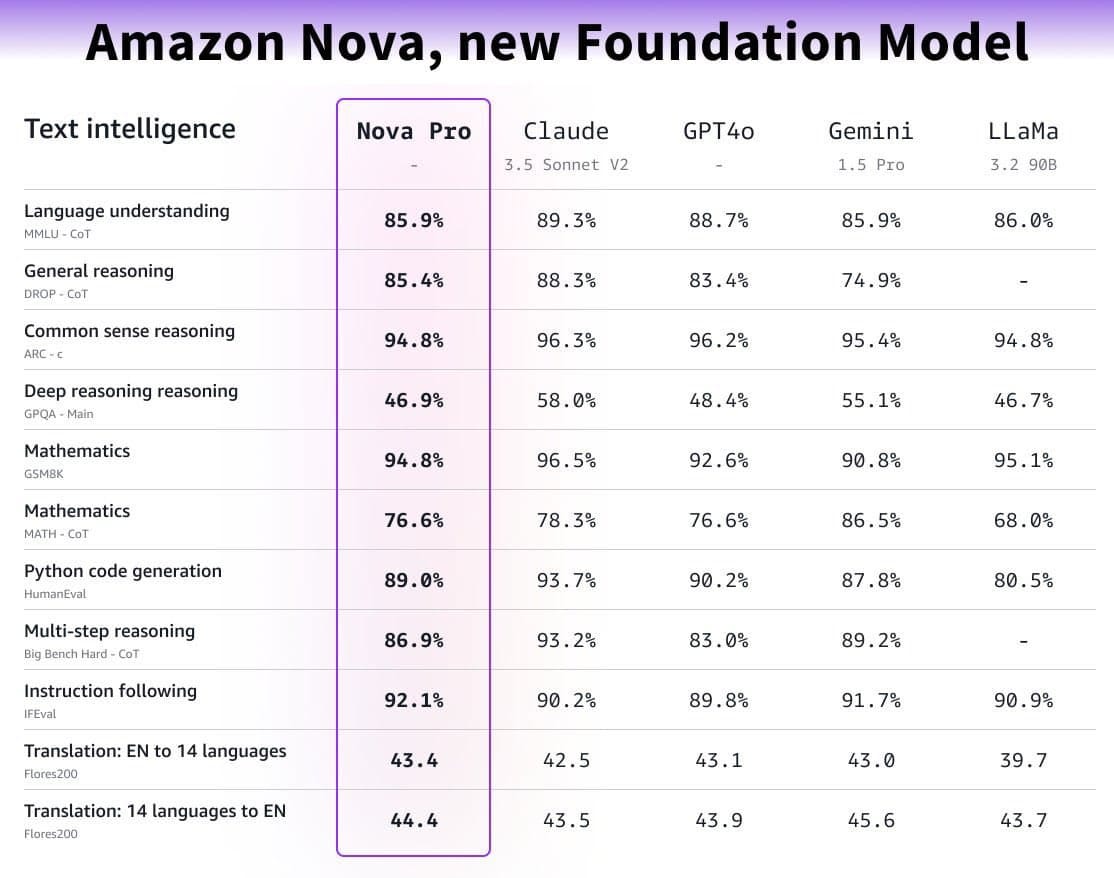

🗞️ Amazon (after its failed attempt with the Titan series last year) released Nova, its family of foundation models that's competitive with frontier models like GPT-4o, Gemini 1.5 Pro, and Claude 3.5 Sonnet. The family includes Amazon Nova Micro, a small and cheap text-only model; Amazon Nova Lite, a low-cost and fast multimodal model; Amazon Nova Pro, a medium-sized multimodal model with the best combination of accuracy, speed, and cost; and Amazon Nova Premier, their most capable multimodal model for complex reasoning, which will be available in Q1 2025. They also released Amazon Nova Canvas, a text-to-image generation model, and Amazon Nova Reel, a text-to-video generation model. Whew, that's a lot for one release! All of these models except Premier are available for inference on Amazon Bedrock right now.

Source: @ns123abc on Twitter

🤯 Meta released Llama 3.3 70B, which blows away its previous Llama 3.1 405B model like dandelion parachutes in the wind. This is a big moment: by using a new alignment process and improvements in online RL techniques, Meta was able to compress the intelligence of a ~5.8x larger frontier model into a much smaller one that runs at a fraction of the inference cost.

Source: @AIatMeta on Twitter

🌕 Moondream released Moondream 0.5B, the smallest performant VLM, tiny enough to run on edge devices. At 8-bit quantization, it requires a mere 996 MiB of memory to run, and at 4-bit, only 816 MiB. Like its elder 2B sibling, the 0.5B model has openly available weights.

🗣️ The team at Pleias released Pleias 1.0, a family of 350M, 1.2B, and 3B models, plus two additional models, Pleias-Pico (350M) and Pleias-Nano (1.2B), that are specialized for multilingual RAG and outperform several larger models. The family focuses on being performant while staying small enough to run on a CPU. Another interesting thing about this release: Pleias says these are the first-ever models trained exclusively on open data (non-copyrighted or published under a permissive license) and the first fully EU AI Act-compliant models.

❄️ Snowflake released the Arctic Embed L 2.0 and M 2.0 embedding models, trained on multilingual data to support English, French, Spanish, Italian, and German. They compete head-to-head with OpenAI's Text Embedding 3 Large without the drop in English quality that is typical of other multilingual embedding models. Speaking of multilingual embeddings, JinaAI released its multilingual + multimodal Jina CLIP v2 embeddings a couple of weeks ago.

💭 Following DeepSeek and Qwen, the Chinese InternLM team released a reasoning model named InternThinker with long-form thinking, plus self-reflection and self-correction during reasoning. Internally, InternThinker is a small model distilled from a large model's CoT results.

⛵️ ByteDance (parent of TikTok) released Sailor2, a family of LLMs (0.8B, 8B, and 20B) with SoTA multilingual language understanding, specializing in South-East Asian (SEA) languages and released under the permissive Apache 2.0 license.

🚢 Google DeepMind Ships:

♊️ The Gemini team released Gemini-exp-1206 (which many suggest might be the new Gemini 2.0 Flash [1] [2], which would be insane if true), and it decimates all other models on the popular benchmarks. In our testing too, it feels significantly better at understanding complex prompts and at coding, sometimes solving in one shot problems that took Sonnet and 4o a few rounds of back-and-forth.

🧞‍♂️ Google DeepMind introduced Genie 2, a large-scale foundation world model capable of generating an endless variety of action-controllable, playable 3D environments for training and evaluating embodied AI agents. That's a leap forward from Genie 1, which was limited to generating 2D worlds. Genie 2 handles object interactions, complex character animation, and physics, and it can model (and thus predict) the behaviour of other agents.

🌤️ They also introduced GenCast, an AI ensemble model that predicts weather and the risks of extreme conditions with SoTA accuracy. In partnership with weather agencies, this could lead to much better forecasting and a lot of economic value saved through better predictions.

🎥 Veo, Google’s Video Generation model is now available on Vertex AI in private preview, along with Imagen 3, their image generation model. Looks like someone is gearing up for the upcoming OpenAI Sora releases.

👁️ The Gemma team released PaliGemma 2, which swaps the old PaliGemma's decoder for the new Gemma 2. It comes in 3B, 10B, and 28B sizes.

🎁 NotebookLM partnered with Spotify to launch the "Spotify Wrapped AI podcast", which uses Google's NotebookLM Audio Overview to create a podcast discussing and breaking down your year in music.

⭕️ 12 Days of OpenAI: Day 1 and Day 2

OpenAI is doing 12 Days of OpenAI: for the next 12 weekdays (2 already done), they'll be announcing big and small updates about what they have been working on.

🧠 Day 1: o1, their reasoning model, is out of preview and is faster, more concise, and smarter (really tho?), and it now supports image uploads. They also introduced a $200/mo ChatGPT Pro plan with unlimited access to all models, plus access to "o1 pro mode", a version of o1 that uses more compute to think harder and provide even better answers to the hardest problems. This is meant for users who need cutting-edge, research-grade intelligence in their day-to-day work.

🎚️ Day 2: OpenAI announced Reinforcement Fine-Tuning, which lets users fine-tune models for specific, complex tasks in domains such as coding, scientific research, or finance.

Misc:

🎖️ Cohere released its Rerank 3.5 endpoint with an enhanced understanding of complex user queries and multilingual support for 100+ languages along with the ability to search long documents with rich and relevant metadata (e.g. emails, reports), semi-structured data (e.g. tables, JSON), and code.

🔎 Exa introduced Websets (currently in waitlist), a way to find a comprehensive set of anything you want from the web using AI.

🔐 0x Digest

👛 Vitalik wrote "What I would love to see in a wallet", which makes this an officially important problem to solve for the rest of the crypto bros too. He talks about how L2 interactions, privacy, deep security, 2FA, and more should be built-in parts of wallets.

⏳ Balaji tweeted that this is the best time to be in crypto and that someone should take the lead and live a fully anonymous life. He says one should become Zatoshi to bring out ZEthereum. And unlike zk tech on Ethereum, which mainly solves scaling issues, he mentioned that this is about addressing privacy needs.

📱 Coinbase launched Apple Pay for fiat-to-crypto purchases via Coinbase Onramp. This solves a big part of the equation: onboarding new users just got easier. Support for Apple Pay unlocks the next million users who would otherwise not have bought crypto. Oh! And Base also confirmed there is no plan for a Base network token; they'd rather keep building useful things.

📈 Last week Bitcoin reached a great milestone of $100k but reports are signaling a 30% pullback soon. And while the crypto Twitter ain’t all bullish on Ethereum (trade-wise), the legendary CryptoLark says ETH is going to $8k and there is no stopping.

🗺️ Crypto x Countries

🇺🇸 Earlier this month, the US Govt moved some 19,800 BTC (worth ~$2B) linked to Silk Road to Coinbase Prime. For what? Of course, with the intent to liquidate. And they probably did sell it at ~$100k, as there was a dip after that.

🇫🇷 The French Senate has proposed an "unproductive wealth tax" that would include unrealized gains from digital assets like Bitcoin. The proposal aims to tax dormant assets. However, it is not yet final legislation and is still in the early stages of policy development.

📝 Interesting proposals to check out

EIP-7830: Contract size limit increase for EOF, raising the limit for EOF contracts only, from 24 KiB to 64 KiB.

EIP-7833: Scheduled function calls, by adding a new opcode `OFFERCALL` (personally not sure if this would be a good idea).

EIP-7831: Multi-Chain Addressing by @samwilsn. This proposes a chain-specific address format that allows specifying both an account and the chain on which that account intends to transact, e.g.:

`nibbles.eth:optimism`
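To make the idea concrete, here is a minimal TypeScript sketch that splits such an address into its account and chain parts. It assumes the simple `account:chain` shape from the example (split at the last colon); `parseChainSpecificAddress` and `formatChainSpecificAddress` are hypothetical helpers, not part of any wallet library, and the draft EIP's actual grammar may differ.

```typescript
// Hypothetical shape of a parsed chain-specific address.
interface ChainSpecificAddress {
  account: string; // e.g. an ENS name such as "nibbles.eth" or a 0x-prefixed address
  chain: string;   // e.g. a human-readable chain name such as "optimism"
}

// ASSUMPTION: the format is "<account>:<chain>" with the LAST ':' as separator
// and neither part empty. The real EIP-7831 grammar (a draft) may differ.
function parseChainSpecificAddress(input: string): ChainSpecificAddress {
  const sep = input.lastIndexOf(":");
  // sep === -1 means no separator; sep === 0 means an empty account;
  // sep at the last index means an empty chain.
  if (sep <= 0 || sep === input.length - 1) {
    throw new Error(`not a chain-specific address: ${input}`);
  }
  return { account: input.slice(0, sep), chain: input.slice(sep + 1) };
}

// Inverse of the parser under the same assumption.
function formatChainSpecificAddress({ account, chain }: ChainSpecificAddress): string {
  return `${account}:${chain}`;
}

const parsed = parseChainSpecificAddress("nibbles.eth:optimism");
console.log(parsed.account, parsed.chain); // nibbles.eth optimism
```

A wallet could use the chain part to pick the right network before sending, instead of trusting whichever chain happens to be selected in the UI.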

🛠️ Dev & Design Digest

🖱️ The Browser Company released a recruiting video for their new browser "Dia". They are looking for gigachad systems, AI, and infra engineers to help them build this new vision of the browser. They say out loud that "AI won’t exist as an app. Or a button. We believe it’ll be an entirely new environment — built on top of a web browser". But but.. what happens to Arc? Well, they won't abandon it.

🌐 In all its glory and stability, React 19 was released earlier this week. We're yet to try it firsthand, but in short, a stable release of a major version is good news. The main improvement since the last release candidate (RC1) was React Suspense performance (~~Suspense is not suspense anymore~~); truth be told, most of the time you just make things fast by doing lazy delegation.

Our favorites: `ref` can now be passed as a prop (bye-bye `forwardRef`), plus `useFormStatus`, `useTransition`, `useOptimistic`, and a few more hooks that could flip over the business of many libraries.

🧊 Adam from Pulumi wrote about the Cursed Container Iceberg we have built in the name of hosting things on the internet, and how, from mobile phones to big-ass machines, we now have 105+ options to host and deploy containers.

Source: Pulumi Blog

What brings us to awe 😳

💫 The coming year, 2025 (45²), might be the only perfect square year most of us will live in. (not you Bryan Johnson) [found when doom-scrolling]
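The arithmetic behind the claim: the gap between consecutive squares is $(n+1)^2 - n^2 = 2n + 1$, so around 45² perfect-square years are roughly 90 years apart.

```latex
44^2 = 1936, \qquad 45^2 = 2025, \qquad 46^2 = 2116
\qquad (2025 - 1936 = 89 = 2 \cdot 44 + 1,\quad 2116 - 2025 = 91 = 2 \cdot 45 + 1)
```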

🎯 Kilian wrote about "Where autofocus shines". To set the context, the `autofocus` attribute is tricky and often produces unexpected behavior as the page gets more complex. But there is one place where it shines, and that's what the article is about: "single-purpose, single-form pages".

🫀 Even in 2003, there were between 50 and 100 people in the US who still had nuclear-powered pacemakers. These plutonium-powered pacemakers used thermoelectric batteries that generate electricity from the heat of radioactive decay. (If this weren't a sensitive topic, we would've said Arc Reactor goes brr..)

💥 Nolen, the One Million Checkboxes guy, is back with another project, EveryUUID.com (bro's got some serious domain shopping going on). Just like the previous one, this is not an easy problem, but hey, Nolen was struggling to remember all of the UUIDs. He had to handle all the rendering by himself, and on top of that, he went all the way to add some entropy, ordering, and search. He wrote a neat article explaining it all: "Writing down every UUID".
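Why can every UUID be listed at all? A version-4 UUID has 128 bits, but 6 of them are fixed (a 4-bit version nibble `0100` and 2 variant bits `10`), leaving 122 free bits. Below is a minimal TypeScript sketch, assuming the standard v4 layout, of a bijection between integers in [0, 2^122) and v4 UUIDs. `indexToUuid`, `uuidToIndex`, and `MAX_INDEX` are hypothetical names; this plain sequential mapping is not Nolen's implementation, which layers entropy, ordering, and search on top. It needs BigInt, so an ES2020+ target.

```typescript
// 122 free bits in a v4 UUID (128 minus 4 version bits minus 2 variant bits).
const FREE_BITS = 122n;
const MAX_INDEX = (1n << FREE_BITS) - 1n;
const LOW62_MASK = (1n << 62n) - 1n;

// Spread the 122 bits of `index` around the fixed version and variant bits.
function indexToUuid(index: bigint): string {
  if (index < 0n || index > MAX_INDEX) throw new RangeError("index out of range");
  const low62 = index & LOW62_MASK;      // last 62 free bits
  const mid12 = (index >> 62n) & 0xfffn; // 12 bits that follow the version nibble
  const high48 = index >> 74n;           // first 48 bits
  const value =
    (high48 << 80n) | // bits 127..80
    (4n << 76n) |     // version nibble 0100, bits 79..76
    (mid12 << 64n) |  // bits 75..64
    (2n << 62n) |     // variant bits 10, bits 63..62
    low62;            // bits 61..0
  const hex = value.toString(16).padStart(32, "0");
  return [
    hex.slice(0, 8),
    hex.slice(8, 12),
    hex.slice(12, 16),
    hex.slice(16, 20),
    hex.slice(20),
  ].join("-");
}

// Inverse: strip the fixed bits back out to recover the index.
function uuidToIndex(uuid: string): bigint {
  const value = BigInt("0x" + uuid.replace(/-/g, ""));
  if (((value >> 76n) & 0xfn) !== 4n || ((value >> 62n) & 0x3n) !== 2n) {
    throw new Error("not a version-4 UUID with the standard variant");
  }
  const high48 = value >> 80n;
  const mid12 = (value >> 64n) & 0xfffn;
  const low62 = value & LOW62_MASK;
  return (high48 << 74n) | (mid12 << 62n) | low62;
}

console.log(indexToUuid(0n));        // 00000000-0000-4000-8000-000000000000
console.log(indexToUuid(MAX_INDEX)); // ffffffff-ffff-4fff-bfff-ffffffffffff
```

Row n of a page like EveryUUID can then be rendered as `indexToUuid(n)` on demand, so nothing has to be stored, which is the only way a list of 2^122 entries can "exist".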

Today I (we) Learnt 📑

🌈 In case you were feeling "Oh, I know this thing", Derek has some bad news for ~~all~~ most of us. Rainbows are more than you (might) think: they are personalized optical illusions for each of us. How personalized? Well, no two people see the same rainbow; even your left and right eyes don't see the same rainbow. Also, it's not enough to say that "white light scattering" produces a rainbow. He also explains things like why you can't see a rainbow when looking in the direction of the sun and why the sky is darker above the rainbow.

🥫 Fred Baur, a chemist for Procter & Gamble in Cincinnati, invented the familiar Pringles potato chip can. He was so proud of his design that he requested that his ashes be buried in one of the tall, circular Pringles cans. Or, as he said, "I want to be buried in one of my creations".

🪙 On average, an EV contains approximately 83 kg of copper, ~4x that of a traditional internal combustion vehicle. So, buy the copper stocks anon. [Source: The power of Copper in EVs]

🫨 JavaScript has a comma operator that evaluates each of its operands (from left to right) and returns the value of the last operand. What's fascinating is that this operator can be used for stuff like incrementing multiple values together in a `for` loop, discarding the `this` binding of a method reference, or running `eval` indirectly so that it cannot touch the local scope. I thought it was not designed to do `this` (pun intended) until I came across this "comma operator trick".
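A minimal TypeScript sketch of the first two uses; `reverseInPlace` and `counter` are hypothetical names for illustration. Note that `let i = 0, j = ...` in the loop header is a declaration list, not the comma operator; the operator is the `i++, j--` in the update clause.

```typescript
// 1. Operands are evaluated left to right; the expression's value is the last one.
const log: string[] = [];
const lastValue = (log.push("first"), log.push("second"), log.length);
// lastValue is 2, and log is ["first", "second"]

// 2. Updating two indices at once in a for loop's update clause.
function reverseInPlace<T>(items: T[]): T[] {
  for (let i = 0, j = items.length - 1; i < j; i++, j--) {
    [items[i], items[j]] = [items[j], items[i]]; // swap the ends, move inward
  }
  return items;
}

// 3. Dropping the `this` binding: `(0, obj.method)` evaluates to the bare
// function value, so calling it is a plain call, not a method call.
const counter = {
  whoAmI(): string {
    return this === counter ? "bound" : "unbound";
  },
};

console.log(lastValue);                    // 2
console.log(reverseInPlace([1, 2, 3, 4])); // the array 4, 3, 2, 1
console.log(counter.whoAmI());             // bound
console.log((0, counter.whoAmI)());        // unbound
```

The `(0, fn)()` form is the same pattern TypeScript and Babel emit when compiling calls to imported functions to CommonJS, so you may have already seen it in build output. `(0, eval)(code)` works the same way: it turns a direct `eval` into an indirect one that runs in the global scope.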

🤝 You have read ~50% of Nibble, the following section brings tools out from the wild.

What we have been trying 🔖

🪷 Apps for ADHD: Best, hand-picked, and game-changing apps, that the ADHD community has tested to help you focus better, work smarter, and stress less.

🤖 ai.pydantic.dev: A Python Agent Framework by Pydantic designed to make it easier to call LLMs and run agentic workflows along with Pydantic's structured validation.

🎱 Eightify: A Chrome extension that summarizes YouTube videos using AI, giving you a TL;DW of the video.

🖥️ scrapybara: a platform that provides instant, secure virtual desktop environments via API for AI agents to perform tasks, since running agents on your own machine is not recommended. (Extra marks for the cute name.)

Builders’ Nest 🛠️

💽 slatedb: A cloud-native embedded storage engine built on object storage.

🍒 cherry-studio: a cross-platform desktop client supporting multiple LLMs with document and data processing, AI assistants, and more features.

⚒️ es-toolkit: a JS utility library that is ~3x faster and up to 97% smaller than lodash, making it a major upgrade.

✨ lumen: AI-generated commit messages and Git change summaries from the CLI, without API keys.

Meme of the week 😌

Off-topic reads/watches 🧗

🏁 You’ve already failed by Seth Godin addressing how “any effort we spend on controlling the uncontrollable bits is wasted”

👏 Making it Happen vs. Letting it Happen by Jason Fried talks about how you don't always have to push the ball uphill (making it happen) and should trust the machine you have built to do the rest (letting it happen). It's loosely analogous to the explore vs. exploit trade-off.

💼 How to Get a Job Like Mine by Aaron Swartz talks about his journey into the world of tech and the importance of curiosity, saying yes, and taking initiative, which led him to the stressful but fulfilling life he lived.

📕 Facebook’s Little Red Book is an internal book distributed within Facebook that has Facebook’s ethos—breaking things, thinking big, and moving fast—distilled into a manifesto. More than a handbook, it was a declaration of identity, solving the problem of scaling culture during explosive growth for its then employees.

Wisdom Bits 👀

“Stand for something or you will fall for anything.”

― Rosa Parks

Wallpaper of the week 🌁

🌌 Grab the week’s wallpaper at wow.nibbles.dev.

Weekly Standup 🫠

Nibbler A is finally² back home this time. He was mostly busy at some events mid-week, caught up on his anime list as the weekend came closer, tried to solve the AoC problems he had missed, and was found cheering on Gukesh at the WCC.

Nibbler P is back home this week as well, after two weeks of moving around. He did some reading, watched a couple of movies, tried his hand at golf, got a little inebriated, and ran his third-best 5k.

If you have 2 minutes, you can fill out a feedback form here. This would help us make The Nibble even better for you and other readers out there!

If you liked what you just read, recommend us to a friend who’d love this too 👇🏻
