#96 — OAI on "wrong side of history", Superglue brings React to Rails, Waiter at Denny's talks about GPUs and future

o3-mini, Humanity’s Last Exam, DeepSeek Janus Pro, RIP-7859, ai16z rebrands, Sui lands on Phantom, Nest 11, JS Temporal, Electron w/ Native, Leap smearing, cs16.css, LocalSend, Be in charge & more

👋🏻 Welcome to the 96th edition! This week, we are experimenting a little with the format. Let us know how you like it!

96, an abundant number, just like the news we have seen this week.
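For the curious, here is a tiny sketch of what "abundant" means: a number is abundant when its proper divisors add up to more than the number itself. The function names are ours, purely for illustration.

```python
def proper_divisor_sum(n: int) -> int:
    """Sum of all divisors of n that are smaller than n."""
    return sum(d for d in range(1, n) if n % d == 0)


def is_abundant(n: int) -> bool:
    """A number is abundant when its proper divisors sum to more than n."""
    return proper_divisor_sum(n) > n


# 1 + 2 + 3 + 4 + 6 + 8 + 12 + 16 + 24 + 32 + 48 = 156, which exceeds 96
print(proper_divisor_sum(96), is_abundant(96))  # 156 True
```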

📰 Read #96 on Substack for the best formatting

🎧 You can also listen to the podcast version, powered by NotebookLM

Psst… if you have a second to spare and you really want The Nibble to improve, let us know your thoughts by filling out a small questionnaire. We’ll reinforce the good parts and weed out the bad ones. 💪

Now onto the edition…

What’s Happening 📰

🪦 To start on a positive note (*coughs*), the “Doomsday clock” is now set to 89 seconds to midnight, the closest it has ever been to midnight. The clock was set to 7 minutes to midnight in 1947 and midnight symbolizes the destruction of humanity. Yeah! And of course, AI is one of the big factors along with geopolitical tension and nuclear weapons in the hands of so-called “leaders”.

🌥️ And hey, software engineers have been keeping their own Doomsday clock which moved when Xuan-Son Nguyen raised a Pull Request on llama.cpp: “ggml : x2 speed for WASM by optimizing SIMD”, written mostly by DeepSeek-R1. (it’s joever?) Maybe it's time to reclaim your role.

And while you can add “fucking” to your search query to neutralize the AI summaries (talk about well-mannered AIs, as they can’t repeat curse words), maybe it’s time to learn to respect these agents (you know “just in case”).

✨ AGI Digest

💭 OpenAI, DeepSeek, and the race to think harder (& better?)

🤏 Following some vagueposting from its employees, speculation from the community, and competition from China, OpenAI released two things: o3-mini, the next generation of its small & fast reasoning models, and Deep Research, a search & research agent built on top of o3 (not o3-mini!).

They also did a Reddit AMA answering some popular queries, including releasing the missing image generation in 4o (!!!), tool use and retrieval for reasoning models, displaying the model’s CoT tokens (which might be the teased “one-more-thing” feature; maybe they’ll keep it for Pro users only, but all credit to DS R1 for prodding them in that direction), and possibly doing more open-source work. They also revealed that they used r/ChangeMyView to train o3-mini with the “best human reasoning data available on the face of the Earth”. (Guess who just got treats for being an early investor in Reddit → Sama)

o3-mini is available to all ChatGPT users (unlimited for Pro, 150 messages/day for Plus, and a few[1] messages/day for Free) and in the API for users in tiers 3-5. At $1.1/M input tokens and $4.4/M output tokens, it is a lot cheaper than o1-mini while also being much faster at inference and, of course, more intelligent!
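To get a feel for those prices, here is a back-of-the-envelope sketch. The token counts are a made-up workload, and the helper name is ours; only the two per-million prices come from the announcement. Keep in mind that reasoning tokens are billed as output tokens, so output usually dominates the bill.

```python
# Prices quoted above, in USD per million tokens.
INPUT_PRICE_PER_M = 1.10
OUTPUT_PRICE_PER_M = 4.40


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of a single request at the quoted prices."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_M
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_M
    return input_cost + output_cost


# Hypothetical request: 2,000 prompt tokens, 8,000 output tokens
# (the output count includes the hidden reasoning tokens).
print(f"${estimate_cost(2_000, 8_000):.4f}")  # $0.0374
```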

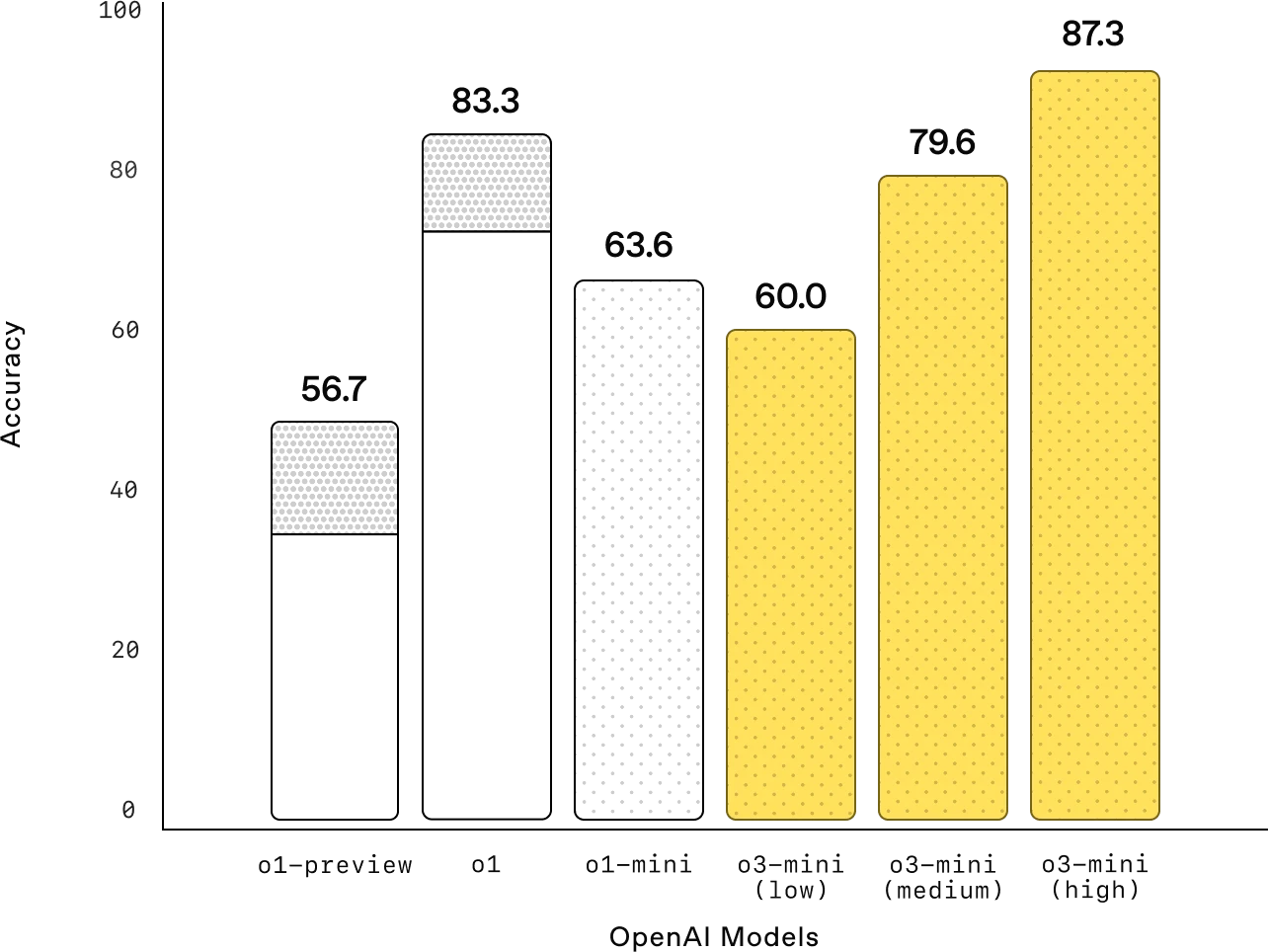

The model can be used with low, medium, or high reasoning effort, controlled in the API by the reasoning_effort parameter. It is already on par with o1-mini on low effort and matches o1 on medium-to-high effort. Sadly, the model's knowledge cutoff is October 2023, a huge bummer when working with recent dev tools. We guess it won’t move anytime soon, given how adamant OpenAI employees are about pairing it with Search instead. People are already comparing it against DeepSeek R1, and from what we and others have tested, there is no clear winner between the two. o3-mini surely performs a lot better on high reasoning effort, but that comes at a large API cost.
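A minimal sketch of what picking an effort level could look like. It only builds the JSON body you would POST to the Chat Completions endpoint and does not send anything; the helper name and the prompt are our own placeholders, and you would still need an API key and an HTTP client (or the official SDK) to make the call.

```python
import json

# The three effort levels mentioned above.
ALLOWED_EFFORTS = ("low", "medium", "high")


def build_request(prompt: str, effort: str = "medium") -> dict:
    """Build a Chat Completions request body for o3-mini (illustrative helper)."""
    if effort not in ALLOWED_EFFORTS:
        raise ValueError(f"effort must be one of {ALLOWED_EFFORTS}, got {effort!r}")
    return {
        "model": "o3-mini",
        "reasoning_effort": effort,
        "messages": [{"role": "user", "content": prompt}],
    }


# Higher effort means more reasoning tokens, which means a bigger bill.
print(json.dumps(build_request("Optimize this SIMD loop", "high"), indent=2))
```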

📝 Deep Research (wonder where they got the name inspiration from while taking a dig at DeepSeek), meanwhile, is a search agent that conducts multi-step research across the internet and user-uploaded files, with access to a Python tool for analysis. It is trained on hard browsing and reasoning tasks across a range of domains and already achieves more than 25% accuracy on the recently released Humanity’s Last Exam, an academic benchmark with broad subject coverage. Currently available only for Pro, it will roll out to the Plus and Team tiers soon.

😳 Meanwhile, DeepSeek is getting popular among developers, primarily because of its low cost, and is already being used extensively: the PR mentioned earlier, for instance, had DeepSeek R1 write 90% of the code that optimizes SIMD in llama.cpp. Unsloth has even released a 1.58-bit quantized version of DeepSeek R1, shrinking it by 80% to just 131GB while retaining much of its reasoning ability via dynamic quantization!

But popularity also comes at a cost. In the last week of January, DeepSeek's servers were hit by a three-day DDoS attack whose traffic reportedly totaled that of all of Europe! And with the US already eyeing a bill that would prohibit anybody in the US from even using Chinese OSS (which would be devastating), providers like Perplexity, Fireworks, and Together are deploying DeepSeek models on their own American servers to keep both the traffic and the data within the borders. India is also waking up to the idea of building stronger, self-sustained AI capabilities, and we are seeing more government and community initiatives around it.

🫵 Meanwhile, OpenAI is still accusing DeepSeek of stealing its output to improve its models, which is a classic case of “the pot calling the kettle black”.

⚓️ New Model Drops

🧧 Even with Chinese New Year last week, the Sino bros kept shipping. We got DeepSeek Janus Pro 7B, a unified multimodal model with native support for both image and text inputs as well as outputs, and an improvement over their previous Janus-1.3B model. It improves on earlier approaches by decoupling visual encoding into separate pathways for understanding and generation, while still using a single unified transformer architecture for processing.

👀 After Qwen Max and Qwen Turbo, the Qwen folks released Qwen 2.5 VL, the next iteration of their vision-language models, in 3B, 7B, and 72B sizes, once again establishing themselves as the top-performing OSS vision LLM family.

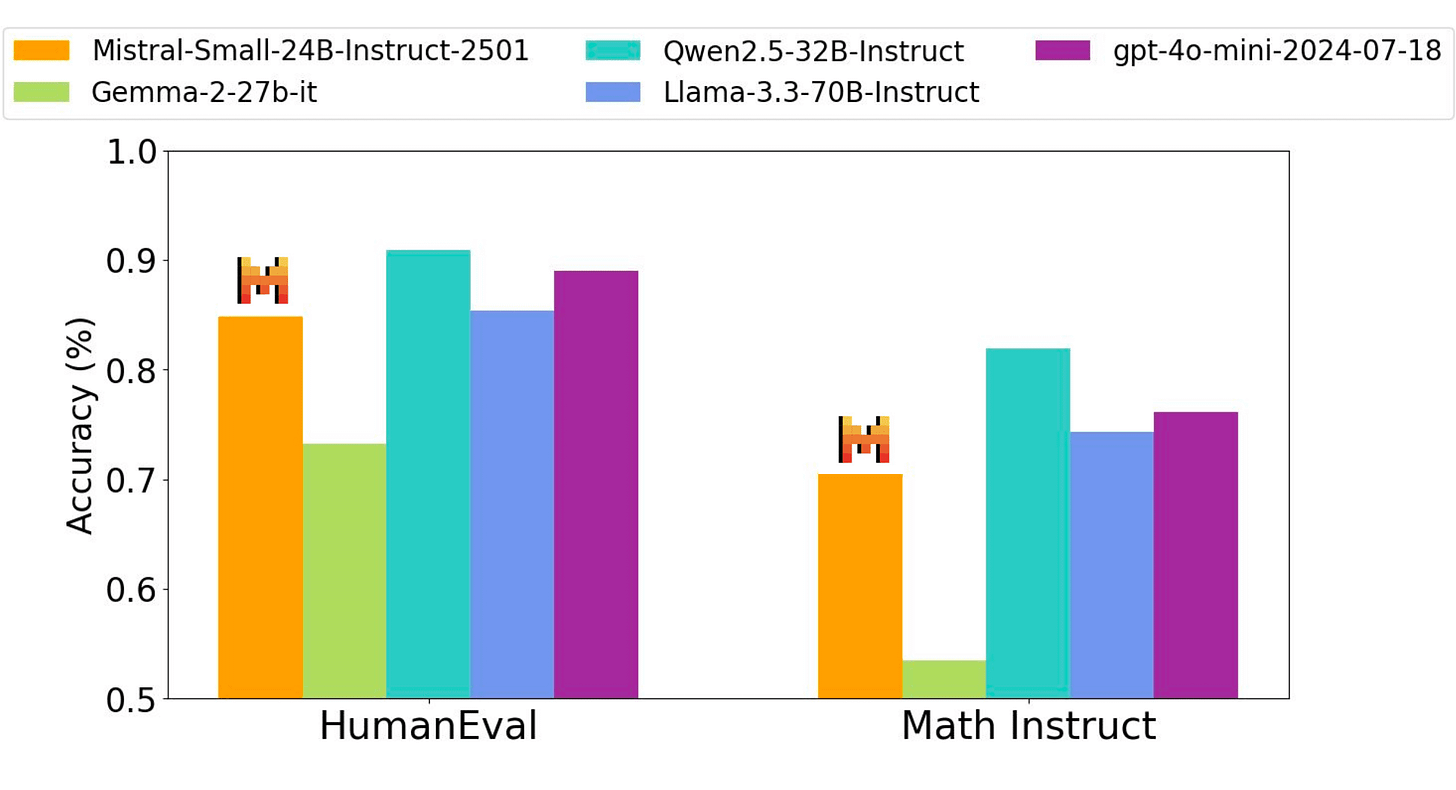

🎐 France’s Mistral, in their signature move, released Mistral Small 3, reaffirming their stance on open source by using the Apache 2.0 license and promising to progressively move away from their previous MRL license. Mistral Small 3 is a strong 24B model that punches above its weight on speed and cost efficiency. Mistral hosts it for $0.1/M input tokens and $0.3/M output tokens at ~140 tok/s throughput.

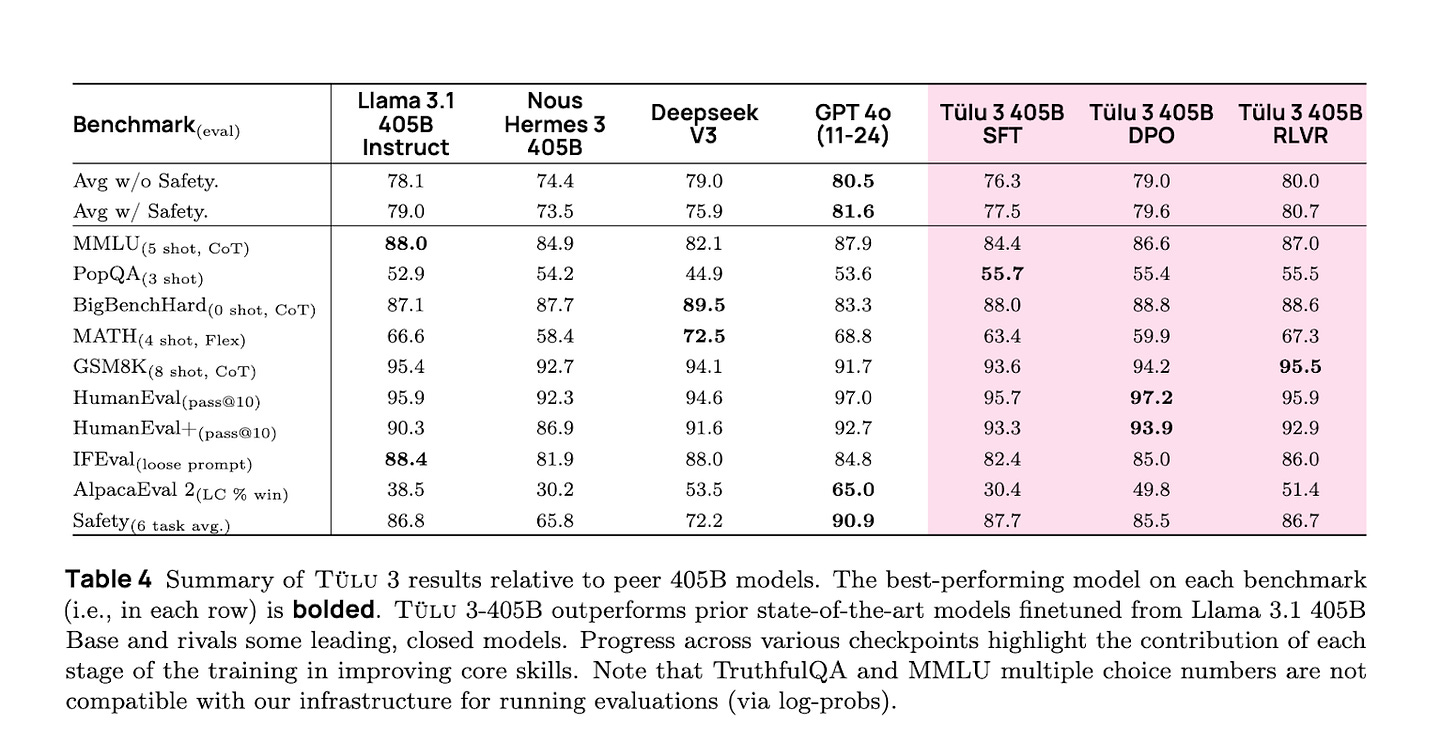

✅ AllenAI added Tülu 3 405B to its Tülu 3 family, which competes with both DeepSeek V3 and GPT-4o. They use their fully open post-training recipe, most notably Reinforcement Learning with Verifiable Rewards (RLVR), a new approach that trains language models on tasks with verifiable outcomes, such as mathematical problem-solving and instruction following, and that significantly improved MATH performance.

📦 And some more developments

🌟 Nous Research announced Psyche, an infrastructure to democratize and decentralize training. It uses Nous’ DisTrO optimizers and the Solana blockchain to coordinate heterogeneous compute (4090s, A100s, H100s) into joint training runs that are fault-tolerant and censorship-resistant.

Starting with an initial Phase 0 on the testnet, they will refine the system to make it fault-tolerant while improving efficiency and rewarding GPU providers appropriately. While they have previously achieved distributed pre-training of a 15B model using DisTrO, running it trustlessly on a blockchain at scale would be a completely new feat.

🤗 You can now call supported Inference Providers for different models directly from their model pages on Hugging Face, as well as via the HF API.

External inference providers, starting with fal, Replicate, Sambanova, and Together AI, are integrated into both the client SDK and the website. You can either use your own API keys for each provider or route requests through HF and pay with HF credits.

🔐 0x Digest

💪 With Interoperability being the theme for this ~~year~~ quarter (nothing lasts for a year in markets) in crypto, a wild RIP-7859 (a Rollup Improvement Proposal) dropped, proposing an overhaul to “Expose L1 origin information inside the L2 execution environment”.

The idea is to make sure L2s can access the most recent block data from Layer 1, but how exactly to do it is still under discussion. There is a major microservices vibe going on in the L1 and L2 space: all we can see is two services calling each other in a private subnet or through some relay.

🛟 In “Never a dull day in Crypto”: hardware wallets keep your monies safe, don’t they? Well, one of the co-founders of Ledger (they have eight), David Balland, and his wife were kidnapped and later rescued in a police operation involving 90 officers.

Post rescue, it was found that his hand had been mutilated, which just re-affirms our beliefs that biometric ledgers and Passkeys are safe as long as the kidnappers want them to be 😭.

👨‍🏫 Former SEC Chair Gary Gensler has returned to MIT to teach AI and Finance. For context: Gensler has done his fair share of mischief in making stringent regulations around crypto.

And Tyler, co-founder of Gemini, said out loud that they will not hire any graduates from MIT, not even interns as long as Gensler is associated with them. Talk about being salty 😭

Well, while we’re talking about unreasonable shitty regulations on crypto, we are pleased to inform you that Indian crypto holders face a 70% tax penalty on undisclosed gains under Section 158B. (cc: Homies)

🤝 The integrations club is busy as usual 👇

💧 Sui is now available on Phantom. I’ve just heard good things about both Solana and Sui doing a few things right that Ethereum wasn’t doing.

This unlocks a big market for Sui as 15M users of Phantom (the leading Solana wallet) now can easily play around with Sui.

$ Aptos gets Native USDC by Circle, so no more circling (bridging) assets, all hail interoperability.

This is huge as I think crypto as a community underestimated the impact of “centralized entity-backed stablecoins in revolutionizing the community said to believe in decentralization” 🙂

🖋️ The renaming club wasn’t sleeping this week: ai16z officially rebranded to ElizaOS because, you know, the name made it confusing to tell them apart from “a venture capital firm in Silicon Valley”.

And well, Chris Dixon asked them to change their name as they became famous. And yeah, for the traders in the mailbox, the ticker remains the same for now: $ai16z is still how they roll, baby!!!

🛠️ Dev & Design Digest

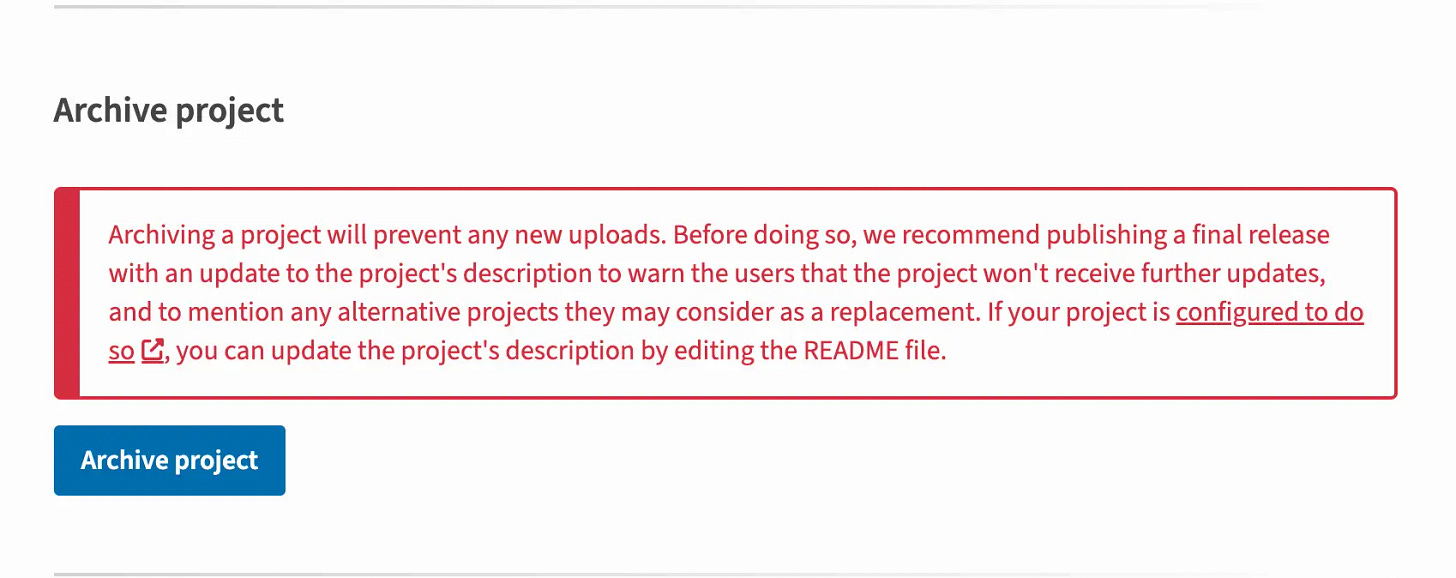

🗂️ PyPI adds support for archiving a project. It gives maintainers a way to signal that the project is staying on the index but that no new releases should be expected, so users can decide carefully whether they want to depend on it.

One thing they don’t tell you outright is that you need 2FA to access “Your Projects”. I went in to archive a project and ended up doing the “TOTP ritual 🤡” (imagine the unamused face).

⚡ Felix, co-maintainer of Electron, was sick of people having misconceptions about Electron and went ahead and wrote an article, “Things people get wrong about Electron”, addressing a few of them, like Electron ain’t native, obese bundles, web apps are bad, and more.

And before people could say more shit about the piece of software he is working on for over a decade he slapped them with this repository containing examples of Native UI with Electron and told them how it’s done.

💞 Superglue, the love affair between React and Rails, is now (on track) v1.0. Superglue is a library designed to make building interactive Rails and React applications as productive as classic Rails stack apps (it is also a fork of Turbolinks 3). The library's author, Johny Ho (not to be confused with the co-founder of Perplexity), has been working on Superglue for over a decade and has seen many attempts at gluing Rails to React, but hey!!! integrations aren’t simple.

Enough talking; what does Superglue bring to the table?

Well, it relies heavily on Rails to do the server-side rendering in its own way, breaking the classic foobar.html.erb into three parts: .json.props, .js, and .html.erb. But instead of sending the whole file[2], it just sends the props.

It leverages the oldie Rails UJS to allow creating dynamic interactions such as modals and pagination, without full page reloads.

It supports Server Side Rendering with its Humid Components. For complex state applications, it provides a way to integrate Redux (Big & true)

⌛ JavaScript Temporal is coming to browsers near you soon. If you have ever used Date/Time in JavaScript, you probably know that the native Date mostly sucks, and you use libraries like moment, luxon, or their distant cousins to handle dates (urge to say I don’t do Dates?).

Temporal adds support for time zone and calendar representations, plus many built-in methods for conversions, comparisons, computations, formatting, and more: over 200 utility functions and counting.

🦊 NestJS announced the release of Nest 11, and just like any other major update, this one comes with a bunch of treats. We’ll highlight a few important ones.

The Logger now supports JSON logging.

Microservices transporters get a new method, unwrap, allowing devs to interact directly with the underlying client.

They have improved the startup time by revamping the module's opaque key generation process and have updated underlying server frameworks like Express and Fastify to v5.

🤝 You have read ~50% (well, we don’t know how much, tbh) of Nibble, the following section brings some fun stuff and tools out from the wild.

What Brings Us T(w)o Awe 😳

⏲️ Rachel By The Bay shares how they smeared the “leap second” at their organization (Facebook, we guess?) back in 2015 by intentionally pushing the whole company into the past. This sorcery is a rather common affair now: major companies that maintain NTP servers smear the leap second across a window of time instead of adding it all at once.
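If "smearing" sounds abstract, here is a rough sketch of the idea as a linear smear: the extra second is spread evenly across a window, so the served clock runs ever so slightly slow instead of jumping. This is our own simplification, not the exact scheme from Rachel's post; the 24-hour window and the function names are assumptions.

```python
def smear_offset(t: float, smear_start: float, window: float = 86_400.0) -> float:
    """Portion of the leap second (0.0 to 1.0 s) absorbed by time t, as a linear ramp."""
    if window <= 0:
        raise ValueError("window must be positive")
    progress = (t - smear_start) / window
    return min(1.0, max(0.0, progress))


def smeared_time(t: float, smear_start: float, window: float = 86_400.0) -> float:
    """Clock reading served to clients: elapsed seconds minus the absorbed part."""
    return t - smear_offset(t, smear_start, window)


# Halfway through a 24-hour window, half of the leap second has been absorbed.
print(smear_offset(43_200.0, smear_start=0.0))  # 0.5
# At the end of the window, the served clock is exactly one second behind.
print(smeared_time(86_400.0, smear_start=0.0))  # 86399.0
```

No single tick is ever repeated or skipped, which is the whole point: software that assumes time only moves forward never sees a 23:59:60.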

🐇 Here’s a Rabbithole for you: What harm does a leap second cause on a Unix system?

😔 Why Applications are OS Specific? by Core Dumped: a short video explaining why the system call design decisions taken by Windows and UNIX create this decades-old problem of having to run OS-specific programs. (*sad noises of all those who want easy ways to play games on Mac*). TL;DR → Sys calls, registers, the sys call table, sys call parameters, and a lot more matter.

💰 Since Jira had "issues" (pun intended), here's a quick walkthrough by Arpit on how Jira moved from JSON to Protobuf and ended up saving 55% of their Memcached cost. Since Protobuf is more compact, they got 80% savings on payload sizes, 75% fewer CPU cycles, and of course better serde than almighty JSON.
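To see why a schema-based binary format shrinks payloads, here is a small stand-in sketch. It does not use Protobuf; it uses Python's struct module with a fixed layout, which shows the same core idea: the schema lives in code, so field names never travel on the wire. The record and its fields are made up for illustration, and the sizes say nothing about Jira's actual numbers.

```python
import json
import struct

# A made-up cache entry; the field names and values are purely illustrative.
record = {"issue_id": 12345, "priority": 2, "resolved": True, "story_points": 3.5}

# JSON repeats every field name in every payload.
json_bytes = json.dumps(record, separators=(",", ":")).encode("utf-8")

# Fixed layout: uint32, uint8, bool, float32 (little-endian, no padding).
LAYOUT = "<IB?f"
binary_bytes = struct.pack(
    LAYOUT,
    record["issue_id"],
    record["priority"],
    record["resolved"],
    record["story_points"],
)

print(len(json_bytes), "bytes as JSON vs", len(binary_bytes), "bytes as packed binary")

# Decoding needs the same layout on the reading side, just like a shared .proto schema.
issue_id, priority, resolved, story_points = struct.unpack(LAYOUT, binary_bytes)
```

The trade-off is the usual one: the binary payload is unreadable without the schema, so both writer and reader must agree on it.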

💫 Samuel built a CSS library based on the Counter-Strike 1.6 UI, called cs16.css. We think this, along with win95.css, is all we need to accelerate down memory lane.

Builders’ Nest 🛠️

🎛️ evo: a modern, offline-first version control system that focuses on what matters most: helping developers write great code together. No more merge conflicts that make you want to quit programming. No more branch structures that look like abstract art. Just a clean, intuitive version control that works.

🗺️ jsvectormap: A lightweight JS library for creating interactive maps and pretty data visualization.

🪗 llm-compressor: Transformers-compatible library for applying various compression algorithms to LLMs for optimized deployment with vLLM.

📦 LocalSend: Free, open-sourced app that allows you to securely share files and messages with nearby devices over your local network without needing an internet connection. Like an OSS Airdrop.

Meme of The Week 😌

Off-topic Reads/Watches 🧗

⌛ Jensen’s Vision for the Future, an interview with Cleo. They discuss how NVIDIA started, what problems they were solving, what led to the creation of the almighty CUDA, and of course how NVIDIA is driving the AI boom. Jensen talks about how they reinvented modern computing and how the next decade is all about Applied AI, Omniverse (with Cosmos), self-driving cars, Biotech, Climate Science, and whatnot.

📱 People don’t like using technology, an old essay by Paras Chopra (we stumbled upon it on Twitter), reminding all of us that “People care about their problems” and that maybe the fancy piece of tech you built is not as pleasing to them.

🫡 To be in charge by Seth, talks about why one should take initiative and act as if they are "in charge" even when it feels uncomfortable.

🧠 A great piece by The Common Reader explaining how “Genius is not age-bound” and also, why Fields medals are given to people below 40.

Wisdom Bits 👀

“Perfection is achieved, not when there is nothing more to add, but when there is nothing left to take away.”

― Antoine de Saint-Exupéry, Airman's Odyssey

Wallpaper of The Week 🌁

🌌 Grab the week’s wallpaper at wow.nibbles.dev.

Weekly Standup 🫠

Nibbler A had a looooong week of revamping some things at home, marking the full week with nil screen time (but hey! over 15k steps daily), and then crying on the pile of emails on Sunday night. (hence the delays)

Nibbler P had a work-filled week that ended with battling zombies, exploring Midgard, and riding through feudal Japan on his new gaming machine while maintaining his weekly workout streak.

If you have two minutes, please complete a feedback form here. Your input will help us improve “The Nibble” for you and other readers!

[1] Of course, we know what they meant by “few”; here you go.

[2] Embedded Ruby file